We’re now halfway through OpenAI’s 12 Days extravaganza and on Day 6 we get something first shown in May — Advanced Voice mode with Video.

Every weekday until December 20 the AI lab is making at least one product, service or feature announcement. It is safe to say it has been a mixed bag so far.

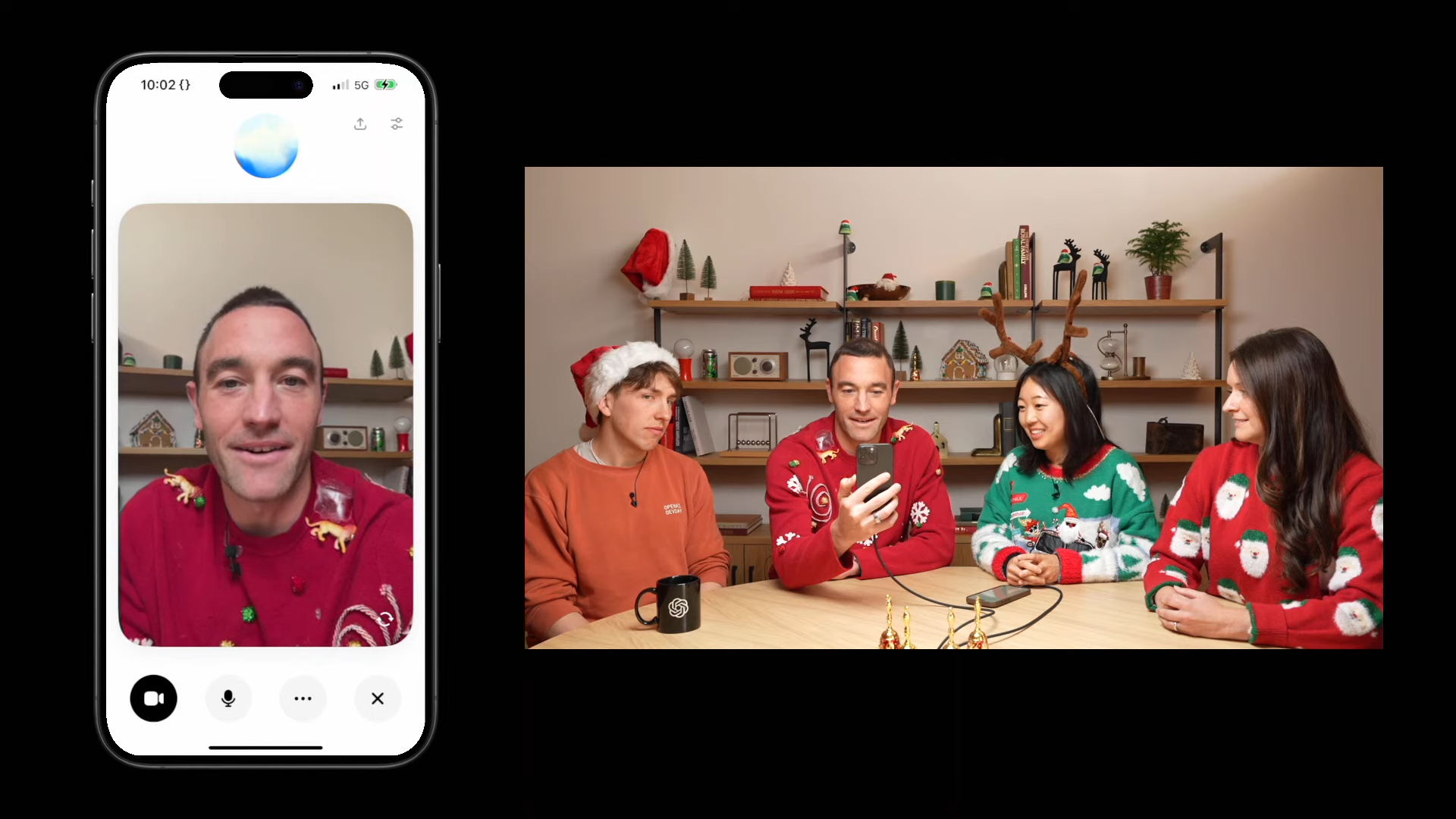

Today’s OpenAI presenters include Chief Product Officer Kevin Weil, alongside voice and vision experts Jackie Shannon, Michelle Qin, and Rowan Zellers.

ChatGPT can now see you in real time while you are talking to Advanced Voice mode, and it seems as natural as having a video conversation with a human.

If this wasn’t enough, we’re also getting a Santa voice throughout December — and it seems to have a British accent. My four-year-old loves talking to ‘AI’ and is going to be obsessed with the Santa voice.

Video and screen share start rolling out in the ChatGPT mobile app today for Team, Plus, and Pro subscribers everywhere but Europe.

Santa is available anywhere you can use Advanced Voice mode.

During the demo, the presenters showed ChatGPT’s improved memory capabilities for video as well as speech and text. It could remember the names of the people shown on camera even when it was only given a voice description.

Advanced Voice is natively multimodal, so conversations are more natural in tone than with other models. As well as video, the update includes screen sharing, so you can show it your apps to troubleshoot problems.

Selecting “share screen” lets you show ChatGPT any app on your phone. You could open a message and ask it for advice on how to respond. It is even able to identify which app you have open.

In another demo, Zellers set up a pour-over coffee device and opened ChatGPT vision. It was able to identify the Santa hat he had on, as well as the dripper. It then talked him through the process of making pour-over coffee step by step.

Throughout the demonstrations ChatGPT Advanced Voice maintained a natural and kind voice, adapting its tone and even laughing as if it were a human.

Advanced Voice with Vision is similar to Google’s Project Astra, updated by Google during its Gemini 2.0 announcement yesterday.

12 Days of OpenAI: The biggest announcements

- [Day Six] ChatGPT with Advanced Voice: Advanced Voice is one of the best features of ChatGPT and with this new update it can see you, the world and even your phone screen thanks to Vision.

- [Day Six] Santa voice: Throughout December ChatGPT Advanced Voice is getting a new Santa voice. The first time you use it, OpenAI will even reset your Advanced Voice messages to zero so you can talk for longer.

- [Day Five] ChatGPT with Apple Intelligence: Apple Intelligence got a huge update today with the release of iOS 18.2, which adds ChatGPT. This brings with it enhanced vision and text capabilities right from the Siri window.

- [Day Four] ChatGPT Canvas launch: OpenAI has finally unleashed ChatGPT Canvas, its text and code editor, to all users. It is also being made available for use with Custom GPTs and gets the ability to run Python code.

- [Day Three] OpenAI launches Sora: OpenAI’s artificial intelligence video generation tool, Sora, is official and enables you to generate videos and images in nearly any style from realistic to abstract. It’s a whole new product for the company on a separate page from ChatGPT.

- [Day Two] Fine-tuning AI models: In a roundtable, OpenAI devs focused on the power behind OpenAI’s models and on reinforcement fine-tuning, which tailors AI models to complex, domain-specific tasks in fields like science, finance and medicine.

- [Day One] ChatGPT Pro Tier: Sam Altman and his roundtable continued the 12 Days by announcing a Pro tier of ChatGPT meant for scientific research and complex mathematical problem solving that you can get for $200 a month (this also comes with unlimited o1 use and unlimited Advanced Voice).

- [Day One] ChatGPT o1 model: OpenAI’s 12 Days of AI kicked off with a rather awkward roundtable live session where Altman and his team announced that the o1 reasoning model is now fully released and no longer in public preview.