Google today introduced its latest quantum chip, Willow (105 qubits), along with two key achievements run on the new chip: breaking the so-called quantum error correction (QEC) threshold and posting a new benchmark result for quantum performance against classical computing. Willow ran a benchmark task in five minutes that Google said would take 10 septillion years (10^25 years) on Frontier, which until a few weeks ago was the fastest supercomputer in the world.

It’s rare to catch Google in a talkative mood, but the technology giant held a media/analyst briefing before today’s announcement with a handful of prominent members of the Google Quantum AI team, including Hartmut Neven, founder and lead; Michael Newman, research scientist; Julian Kelly, director of quantum hardware; and Charina Chou, director and COO. The Google Quantum AI team currently has roughly 300 people, with plans for growth, and its own state-of-the-art fabrication facility at UCSB.

This is an important moment for Google’s quantum effort:

- Breaking the QEC threshold (the point at which the error rate decreases as the number of qubits rises) is a long-sought goal in the community and basically proves it will be possible to build a large, useful, error-corrected quantum computer.

- The company also walked through its roadmap, discussing technical goals (though not in great granularity) and business milestones. While the main focus is on achieving fault-tolerant, error-corrected quantum computing sometime around the end of the decade, Google is also looking at nearer-term applications.

- It also discussed its broad quantum business plans, which include everything from fabbing its own chips and building its own systems to offering quantum services via the cloud (naturally), as well as potential on-premises deployments.

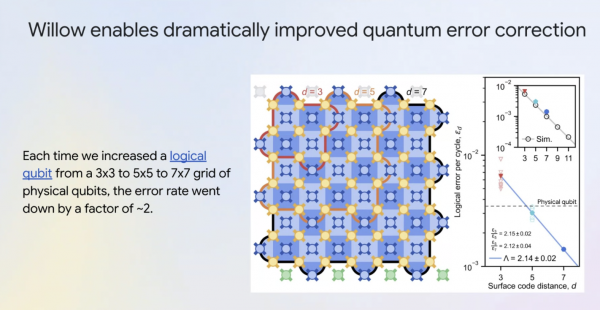

Singling out the QEC work, Neven said, “Something that happened with the Willow achievement, showing that as we went from code distance three to five to seven we halved the error rate each time, in essence showing an exponential reduction of the error rate: I feel the whole community breathes a sigh of relief, because it shows that quantum error correction indeed can work in practice.”
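Neven’s “halving the error rate each time” describes a constant suppression factor, often written Λ in the QEC literature, between successive odd code distances: ε(d+2) ≈ ε(d)/Λ. The minimal Python sketch below tabulates that model with Λ = 2. The starting value of 3e-3 logical errors per cycle at distance 3 is a hypothetical placeholder, not a figure Google reported, and the function name is illustrative.

```python
def logical_error_rates(eps_d3: float, suppression: float, distances: list[int]) -> dict[int, float]:
    """Logical error per cycle at each odd code distance d >= 3, assuming the
    error shrinks by a constant factor `suppression` (Lambda) per +2 in distance."""
    rates: dict[int, float] = {}
    for d in distances:
        if d < 3 or d % 2 == 0:
            raise ValueError("expected an odd code distance >= 3")
        steps = (d - 3) // 2  # how many +2 distance steps above d = 3
        rates[d] = eps_d3 / suppression**steps
    return rates


# Hypothetical starting point: 3e-3 at d = 3, halved at every step (Lambda = 2).
for d, eps in logical_error_rates(3e-3, 2.0, [3, 5, 7, 9, 11]).items():
    print(f"d = {d:2d}: logical error per cycle ~ {eps:.2e}")
```

Because each step divides the error rather than subtracting from it, reaching a given target error takes only logarithmically many distance steps, which is why getting below threshold matters so much.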

“Now you have the building block in hand, almost ready to scale up to the large machines, and our roadmap was published in 2020. One thing to notice is that we are pretty much tracking it as we had laid it out. The large milestone six machine will appear somewhere around the end of the decade,” he said.

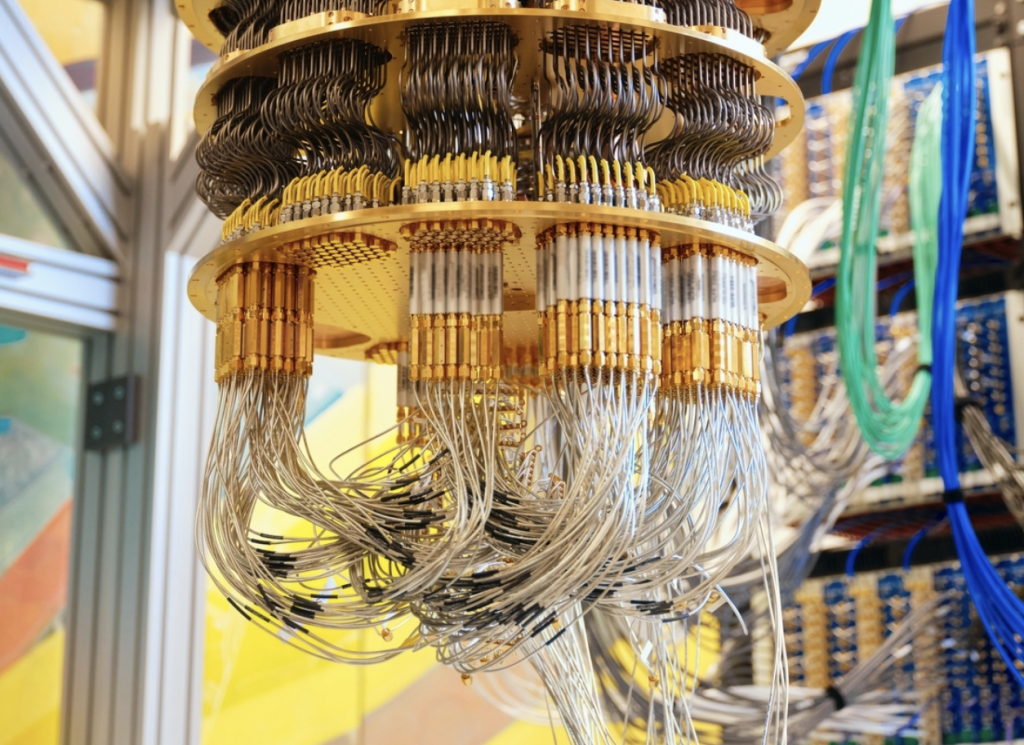

Willow Snapshot – Built for Scalability

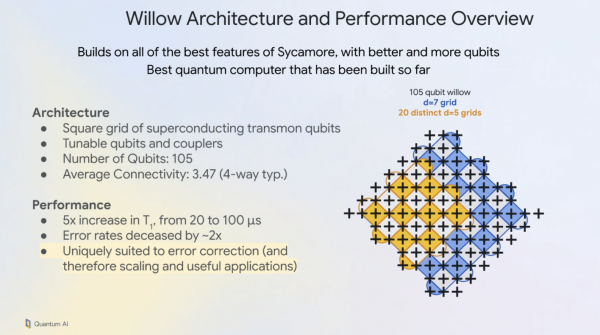

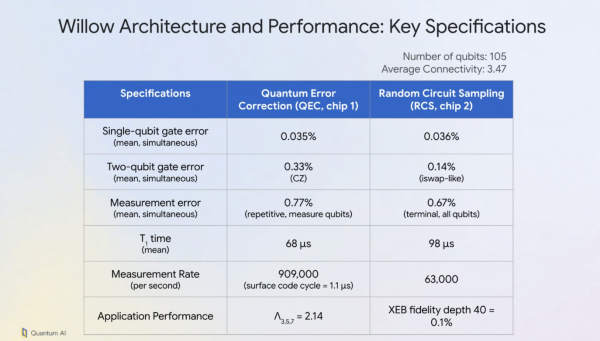

Kelly, director of quantum hardware, said, “If you’re familiar with Sycamore [Google’s earlier QPU], you can think of Willow as basically all the good things about Sycamore, only now with better qubits and more of them. We believe this is the best quantum computer that’s been built so far. The architecture is a square grid of superconducting transmon qubits with tunable qubits and couplers. There are 105 qubits in this grid, and the average connectivity is about three and a half, with typically four-way connectivity in the interior of the device.

“On the performance front, importantly, we’ve been able to increase our qubit coherence times, the T1 value, by a factor of five, from 20 up to 100 microseconds. This is really important to understand: with the previous generation of chips, Sycamore, we did all these amazing things, but we were pushing up against an upper performance ceiling set by the coherence times. We’ve dramatically pushed that ceiling, which has given us a lot of breathing room, and we see the effect immediately: our error rates are reduced by about a factor of two from our Sycamore devices, and these chips are uniquely suited for error correction, and therefore for scaling and useful applications.”
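Two of Kelly’s figures can be sanity-checked with simple models. On a square grid, interior qubits have four neighbors while edge and corner qubits have three or two, which pulls the average below four. For coherence, assuming simple exponential energy relaxation, the chance that an excited qubit decays during a window of length t is 1 - exp(-t/T1). The Python sketch below uses a plain 7 x 15 rectangle of 105 qubits and a 1 µs window; both are hypothetical choices for illustration (Willow’s actual layout and operation timings are not described here), and the function names are illustrative.

```python
import math


def grid_degree_stats(rows: int, cols: int) -> tuple[float, int]:
    """Average and maximum nearest-neighbor count on a rows x cols square grid."""
    degrees = []
    for r in range(rows):
        for c in range(cols):
            # Count the up/down/left/right neighbors that fall inside the grid.
            neighbors = sum(
                0 <= r + dr < rows and 0 <= c + dc < cols
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))
            )
            degrees.append(neighbors)
    return sum(degrees) / len(degrees), max(degrees)


def t1_decay_probability(duration_us: float, t1_us: float) -> float:
    """Chance an excited qubit relaxes within duration_us, assuming exponential
    energy relaxation with time constant T1: 1 - exp(-t/T1), via expm1."""
    return -math.expm1(-duration_us / t1_us)


# Hypothetical 7 x 15 rectangle of 105 qubits (not Willow's actual layout).
avg, interior = grid_degree_stats(7, 15)
print(f"average connectivity {avg:.2f}, interior connectivity {interior}")

WINDOW_US = 1.0  # hypothetical operation window, not a figure from the article
for t1 in (20.0, 100.0):  # before/after T1 values quoted by Kelly
    p = t1_decay_probability(WINDOW_US, t1)
    print(f"T1 = {t1:5.1f} us -> decay chance in {WINDOW_US} us ~ {p:.4f}")
```

In this model the T1-limited error falls by close to the full factor of five, while Kelly cites roughly a factor of two for overall error; that gap suggests other error sources also contribute.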

Google said little about how it would scale to very large systems.

Asked in Q&A about networking technology, Kelly said, “What we’re focusing on is really improving the qubit quality first and then making larger monolithic chips, which we will link together later, as opposed to, for example, taking today’s chip sizes and trying to link them right now. We think our approach is advantageous because it allows you to have multiple logical qubits per chip, and there are some advantages in how the error-correction costs play out for that. So our current focus is basically to make the qubits really good and make the chips bigger, so they can host many physical qubits and eventually multiple logical qubits, and then link them together afterwards. We’re very interested in that (networking), but we’re not sharing anything about it today.”

Quantum Supremacy by Any Other Name

You may recall the buzz around Google’s claim to have achieved quantum supremacy (the ability of a quantum computer to perform a task beyond the realistic capability of classical systems) in 2019 (see HPCwire coverage). IBM and others took issue with the claim. Neven took a moment to revisit the squabble.

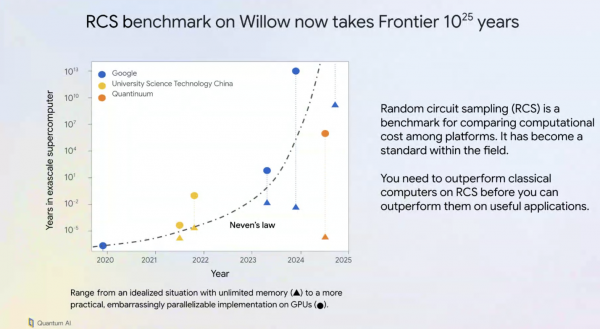

“If you followed quantum computing, and the work of our team in particular, you saw that in 2019 we made a bit of computer science history by showing, for the very first time, a computation that took a few minutes on [Google’s Sycamore quantum chip] but would have taken the then-fastest supercomputer (Summit, ORNL), using the best classical software, 10,000 years to run. By the way, we call this benchmark random circuit sampling (RCS), and it is now widely used in the field and has become somewhat of a standard,” said Neven.

“When we published this result in 2019, a bit of a red team/blue team dynamic between classical and quantum computing ensued, and folks were saying, oh, instead of using a huge amount of time, you could have used a huge amount of memory, or, hey, you could have used my latest favorite algorithm for quantum simulation. While these statements were correct in principle, in practice it took many years to bring the time of 10,000 years down. In the meantime, our processors got better, and if you keep running random circuit sampling on the latest and greatest chip, which means you can now use more qubits and more gate operations, then the time to simulate this, or to run the same computation on a classical machine, shoots up at a double exponential rate, which is a mind-boggling trajectory. That’s why we went from 10,000 years in 2019 to 10 septillion years now in 2024,” he said.
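One way to see why classical simulation cost climbs so steeply: a brute-force state-vector simulator stores 2^n complex amplitudes for n qubits, so every added qubit doubles the memory. The Python sketch below assumes 16-byte complex128 amplitudes. It does not reproduce Google’s 10,000-year or 10-septillion-year estimates, which account for more sophisticated classical algorithms and also depend on circuit depth; it only illustrates the exponential dependence on qubit count. Neven attributes the double-exponential trajectory to processors improving over time on top of that, which the sketch does not model.

```python
def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a full n-qubit state vector: 2**n complex amplitudes,
    each 16 bytes by default (complex128 = two 64-bit floats)."""
    return (2**n_qubits) * bytes_per_amplitude


# 53 qubits: the 2019 Sycamore experiment; 105 qubits: Willow's full grid.
for n in (53, 105):
    size = state_vector_bytes(n)
    print(f"{n:3d} qubits -> {size:.3e} bytes (~{size / 1e15:.3g} PB)")
```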

Google and IBM both work with superconducting qubits, and their endless fencing no doubt serves to move that technology forward for everyone.

Breaking the QEC Threshold

Demonstrating the ability to break the QEC threshold was the biggest news of the day. Doing so required many advances in the chip itself as Google progressed from its Sycamore line to the Willow line. High error rates, particularly in superconducting qubits, have so far limited their usefulness.

Michael Newman, Google research scientist, explained, “Quantum information is extremely fragile. It can be disrupted by many things, ranging from microscopic material defects to ionizing radiation to cosmic rays. Unfortunately, to enact these large-scale quantum algorithms that have all this promise, we sometimes need to reliably manipulate this information for billions, if not trillions, of steps, whereas typically we see failures on the order of one in 1,000 or one in 10,000. So we need our qubits to be almost perfect, and we can’t get there with engineering alone.”
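Newman’s numbers can be made concrete with a simple independence model: if each step fails with probability p, a run of N steps succeeds with probability (1 - p)^N. The Python sketch below evaluates that model and inverts it to find the per-step error rate needed for a given overall success rate. Treating errors as independent and equally likely at every step is a simplifying assumption, and the function names are illustrative.

```python
import math


def success_probability(p: float, steps: float) -> float:
    """Probability that `steps` independent operations all succeed, (1 - p)**steps.
    log1p keeps the calculation accurate when p is tiny."""
    return math.exp(steps * math.log1p(-p))


def required_error_rate(target_success: float, steps: float) -> float:
    """Per-step error p such that (1 - p)**steps == target_success."""
    return -math.expm1(math.log(target_success) / steps)


for p in (1e-3, 1e-4):
    for n in (1e3, 1e6, 1e9):
        print(f"p = {p:g}, N = {n:.0e}: success ~ {success_probability(p, n):.3g}")

# Per-step error needed for a 50% chance of finishing a billion-step computation.
print(f"{required_error_rate(0.5, 1e9):.2e}")
```

The last line comes out below one error per billion steps, about six orders of magnitude better than the one-in-1,000 failure rate Newman mentions; that is the gap error correction is meant to close.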

The way to make almost perfect qubits is quantum error correction. The basic idea is to take many physical qubits and have them work together to represent a single logical qubit. The physical qubits work together to correct errors, and the hope is that as these collections grow larger, there is more and more error correction. The problem is that as they grow larger, there are also more opportunities for error.

“Back in the 90s, it was first proposed that if your qubits are just good enough, if they pass some kind of magical line in the sand called the quantum error correction threshold,” said Newman, “then as you make things bigger, as you make these groupings larger, the error rate doesn’t go up like you’d expect. It actually goes down. No one has achieved this until now. So on December 9, we’ll be publishing results in Nature that show that using the Willow processor, we are finally below this threshold. More specifically, as Willow uses more qubits in these groupings, the errors are suppressed exponentially quickly.”
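The threshold idea can be illustrated with the simplest error-correcting code, a classical repetition code: store a bit as d copies and decode by majority vote, so the encoded bit fails only if more than half the copies flip. This is a swapped-in toy model, not the surface code Willow uses; it handles only bit flips, and its threshold sits at a 50% flip probability, whereas surface-code thresholds under commonly used noise models are around 1%. The Python sketch shows the qualitative behavior Newman describes: below threshold, larger codes fail less often; above it, they fail more often.

```python
from math import comb


def repetition_logical_error(p: float, d: int) -> float:
    """Failure probability of majority voting over d copies (d odd), each
    flipped independently with probability p: P(more than d // 2 flips)."""
    if d < 1 or d % 2 == 0:
        raise ValueError("expected an odd number of copies")
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range(d // 2 + 1, d + 1))


for p in (0.1, 0.6):  # below and above this toy code's 0.5 threshold
    rates = [repetition_logical_error(p, d) for d in (3, 5, 7)]
    print(f"p = {p}: d=3,5,7 -> " + ", ".join(f"{r:.4f}" for r in rates))
```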

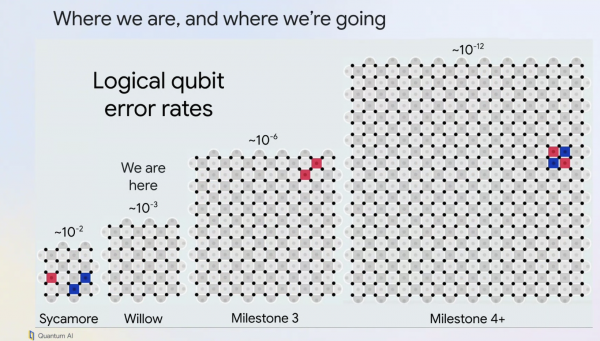

“Here we have a picture of what these logical groupings look like, these logical qubits, which we call surface codes. On the far left, we have our Sycamore processor from last year, where we got error rates of 10 to the minus two. These red and blue blips that you’re seeing are detected errors; each one is an error happening. Now with Willow, the error rates we’re seeing are about a factor of two better physically. So our qubits and our operations have improved by a factor of two, but our logical operations, our encoded qubits, have actually improved by over an order of magnitude. This is because once you’re past this critical threshold, small improvements in your processor are exponentially amplified by quantum error correction. So as you move from left to right, each time you’re roughly doubling the number of qubits and doubling the performance of the chip. In the context of the milestones that Hartmut mentioned, if we were to make another step up, we could get to our milestone three, which is our long-lived logical qubit.”
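Newman’s “roughly doubling the number of qubits” at each step can be compared against the standard rotated surface-code layout, which uses d² data qubits plus d² - 1 measure qubits, or 2d² - 1 in total, at code distance d. The Python sketch below assumes that textbook layout; the exact qubit counts in Google’s experiment may differ from it, and the function name is illustrative.

```python
def rotated_surface_code_qubits(d: int) -> int:
    """Physical qubits in a distance-d rotated surface code:
    d*d data qubits plus d*d - 1 measure (ancilla) qubits."""
    if d < 3 or d % 2 == 0:
        raise ValueError("expected an odd code distance >= 3")
    return 2 * d * d - 1


prev = None
for d in (3, 5, 7, 9):
    n = rotated_surface_code_qubits(d)
    growth = f"  (x{n / prev:.2f} vs. previous)" if prev else ""
    print(f"d = {d}: {n} qubits{growth}")
    prev = n
```

Under this layout the step from distance 5 to 7 (49 to 97 qubits) is close to a doubling, while the first step, from 17 to 49, is nearer a tripling.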

Industry-wide, there’s debate over just how many physical qubits will be needed for each robust logical qubit.

During Q&A, Newman said, “I would say that the general sentiment was that we were going to need about 1,000 physical qubits per logical qubit in order to realize these really large-scale applications, [such as] milestone six [roadmap] level performance. But we’ve done a lot of research on the algorithms and theory side which shows that we can probably get away with something more like a couple of hundred, and I think that’s roughly where it’s going to be. It will probably, inevitably, require on the order of a couple hundred qubits per logical qubit. So that gives you an idea: if our goal is to build a million-physical-qubit quantum computer, divide that by a couple of hundred, and that’s how many logical qubits we anticipate having at that time. But I think opinions differ on this.”
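Newman’s back-of-the-envelope division is easy to reproduce. The Python sketch below takes the million-physical-qubit goal he mentions and compares the older ~1,000:1 estimate with 200:1, an illustrative reading of “a couple of hundred” rather than a figure he gave; the function name is also illustrative.

```python
def logical_qubit_budget(physical_qubits: int, physical_per_logical: int) -> int:
    """Whole logical qubits that fit in a machine, given the per-logical overhead."""
    return physical_qubits // physical_per_logical


MACHINE = 1_000_000  # million-physical-qubit goal mentioned by Newman
for overhead in (1_000, 200):  # older estimate vs. assumed "couple of hundred"
    budget = logical_qubit_budget(MACHINE, overhead)
    print(f"{overhead:,} physical per logical -> {budget:,} logical qubits")
```

Cutting the overhead fivefold raises the logical-qubit count fivefold, from about 1,000 to about 5,000 on the same hardware.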

There are, of course, many companies working hard on developing logical qubits. Microsoft and Quantinuum, for example, recently reported a hybrid classical-quantum approach for building logical qubits.

Quantum is Useful Now

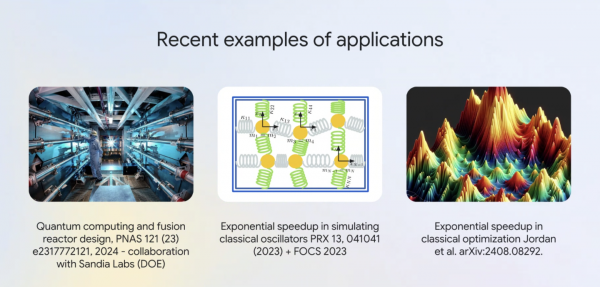

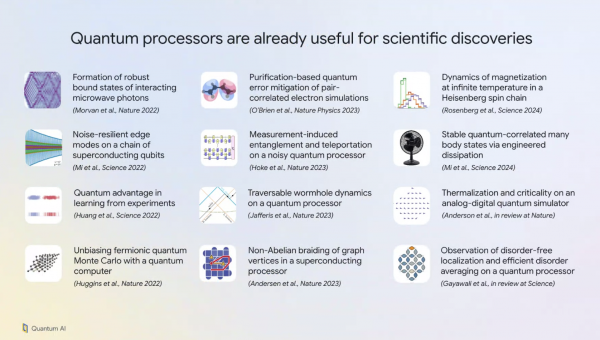

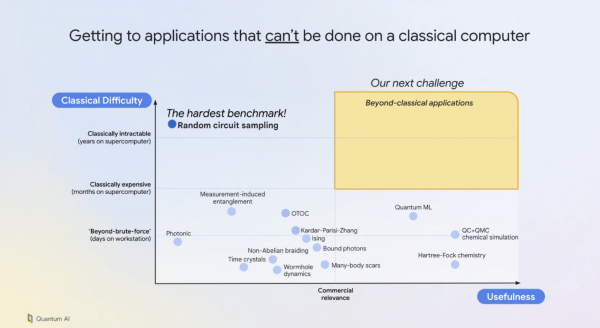

Although Google is focused on delivering fault-tolerant quantum computing, it also noted work already done with quantum processors (see slides).

Chou said, “I want to emphasize this. We’re not trying to build a quantum computer just because it’s a really challenging thing to do, or because it’s a fun science project. All of these things are true, but we really want to get to new capabilities that would otherwise be impossible without quantum computing. The quantum processors of today, and even previous generations like Sycamore, have been used for dozens of scientific discoveries. You can see a snapshot of some of them here on the screen, but there are many others.”

“However, there is an important challenge to note. Every discovery that’s been made on quantum processors to date, while exciting, could also have been made using a different tool: classical computing. So the next challenge we’re looking at is this: can we show beyond-classical performance, that is, performance that is not realistically possible on a classical computer, on an application with real-world impact?” she said.

“We are very much interested in offering a quantum computing service that can solve real-world problems that are not otherwise possible on classical computers. Just to emphasize, none of today’s quantum computing services can offer this yet. Our approach is focused on really solving the hardest technical problems, instead of jumping right ahead to scaling or commercial products. That being said, we do have early partnerships with a limited number of external collaborators, in academia, startups, and large companies, to really help develop quantum computing systems and look at the types of applications that will be useful,” she said.

“In terms of [go-to-market] models, there are a number out there. One of the most obvious in our consideration is offering access through the cloud, and that is something we are currently working on. Looking beyond that, I think there are certainly opportunities to support jobs running at Google, as well as potentially larger, very specific on-prem or other types of models. So those are TBD, but we are currently working on cloud.”

Neven quickly added, “The Willow chip is a nice piece of hardware, and it has enormous raw compute power. So we have ambitions to show that, already with such a platform, you may be able to run useful algorithms. That’s not the conversation for this press round table, but take it as an IOU and watch us over the next year.”

Stay tuned.

Link to blog: https://blog.google/technology/research/google-willow-quantum-chip/

Link to Nature paper: https://www.nature.com/articles/s41586-024-08449-y