Apple Intelligence is forming right before our eyes. This week, Apple shipped the iOS 18.1 dev beta, which incorporates more than a dozen Apple Intelligence updates across Phone, Notes, Photos, Mail, Writing, and Siri. Even at this early stage, it’s clear that Apple’s brand of generative artificial intelligence could change how you use your best iPhone.

First, a big caveat. iOS 18.1 dev beta is not for everyone. The name should give you a clue: this is for developers and intended as a way for them to familiarize themselves with the updated platform and its new Apple Intelligence capabilities. It’ll help them understand how their own apps can tap into upcoming iPhone on-device (and Private Cloud Compute-protected off-device) generative AI capabilities. This is not a software update for consumers, especially since it’s unfinished and not always stable.

It’s also incomplete. Parts of Apple Intelligence are still to come in future betas and updates, some arriving this year and, yes, some next year. They include any kind of generative AI image creation (including Genmoji), photo editing, support for languages besides English (US), ChatGPT integration, Priority Notifications, and Siri’s ability to draw on personal context and control in-app actions (it can already control native device capabilities, though).

I’ve been playing with it because it’s my job, and, yes, I can’t help myself. This is our first opportunity to see how Apple’s AI promises translate into reality in Apple Intelligence.

Siri reimagined

Is it possible for a feature to be both bold and subtle? Siri’s Apple Intelligence glow-up in the iOS 18.1 dev beta removes the Siri orb and, at first glance, appears to replace it with the most subtle translucent bar at the bottom of the screen. On my iPhone 15 Pro, I can bring up Siri by saying its name, “Siri,” or by double-tapping the bottom of the screen.

When I do the latter, I see a mostly see-through bar pulse at the bottom of the display, which indicates Siri is listening. However, if I don’t touch the screen and just say, “Siri” or “Hey Siri,” I see a multi-colored wave pulse through the entire screen with the bezel glowing brightly for as long as Siri is listening. I can also enable the same effect by long-pressing the power button.

Obviously, the new look is exciting and quite probably subject to change. Perhaps more interesting are some of the new Apple Intelligence-infused capabilities that arrive with this early dev beta.

In my experience, the new Siri is already better at remembering context. I’ve asked about locations and then dug into details about them without having to restate the state or city. This could be a time and frustration saver.

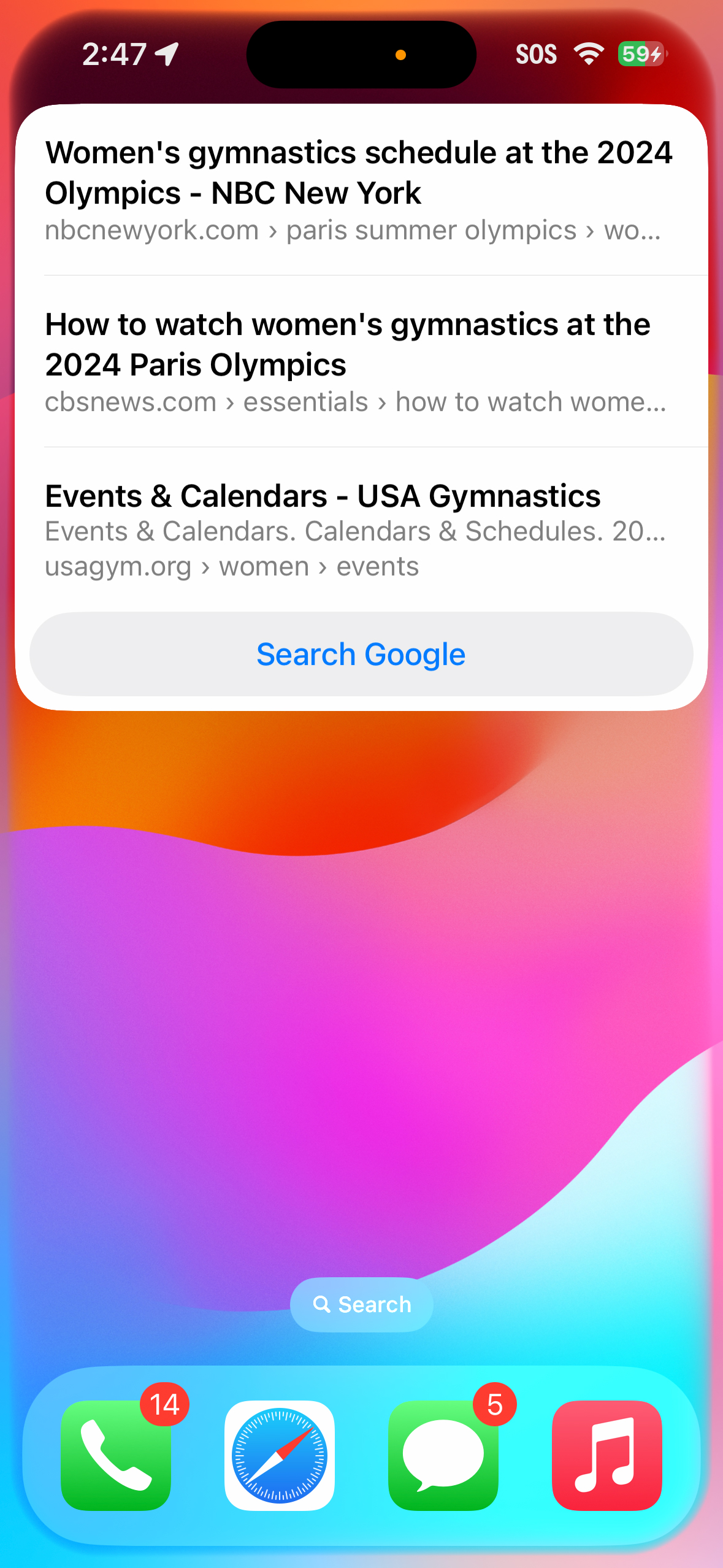

While exploring the iOS 18.1 dev beta, I noticed how subtly Apple Intelligence is integrated. With Type to Siri, for instance, there’s no obvious indicator of where it lives or how to activate this type-only Siri interaction. But that also makes sense to me. When I want Siri’s help but have no interest in speaking my prompt, or no ability to (I’m not alone), typing it in could be quite helpful.

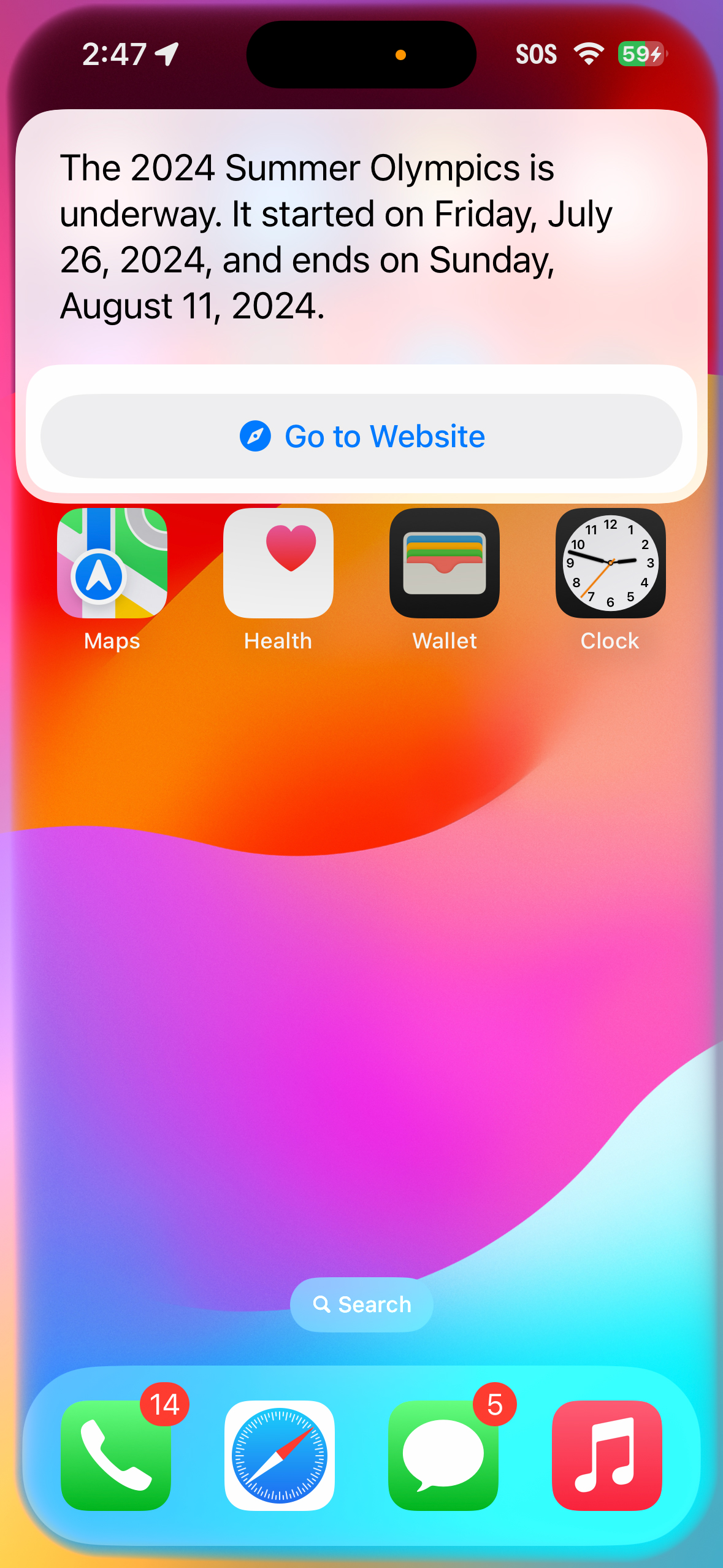

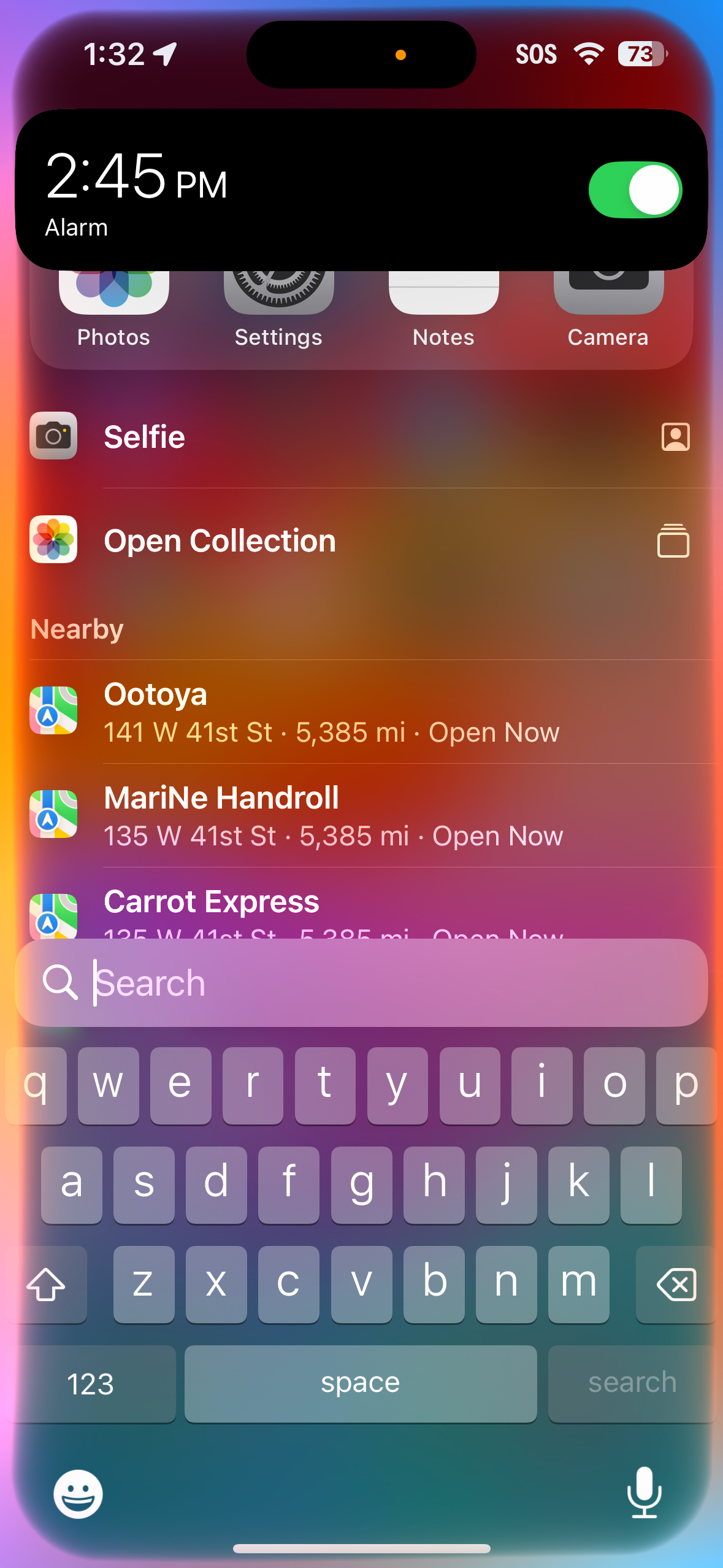

To use Type to Siri, I just double-tapped at the bottom of my iPhone screen, and a prompt box popped up with the words “Ask Siri…”. When I asked it to “Set an alarm for 2:45 PM,” it did so just as wordlessly as I had typed it in. In fact, Siri didn’t tell me that the alarm was set, but when I checked by asking Siri out loud to “Show me my alarms,” it showed me that it was.

You’ve got AI mail

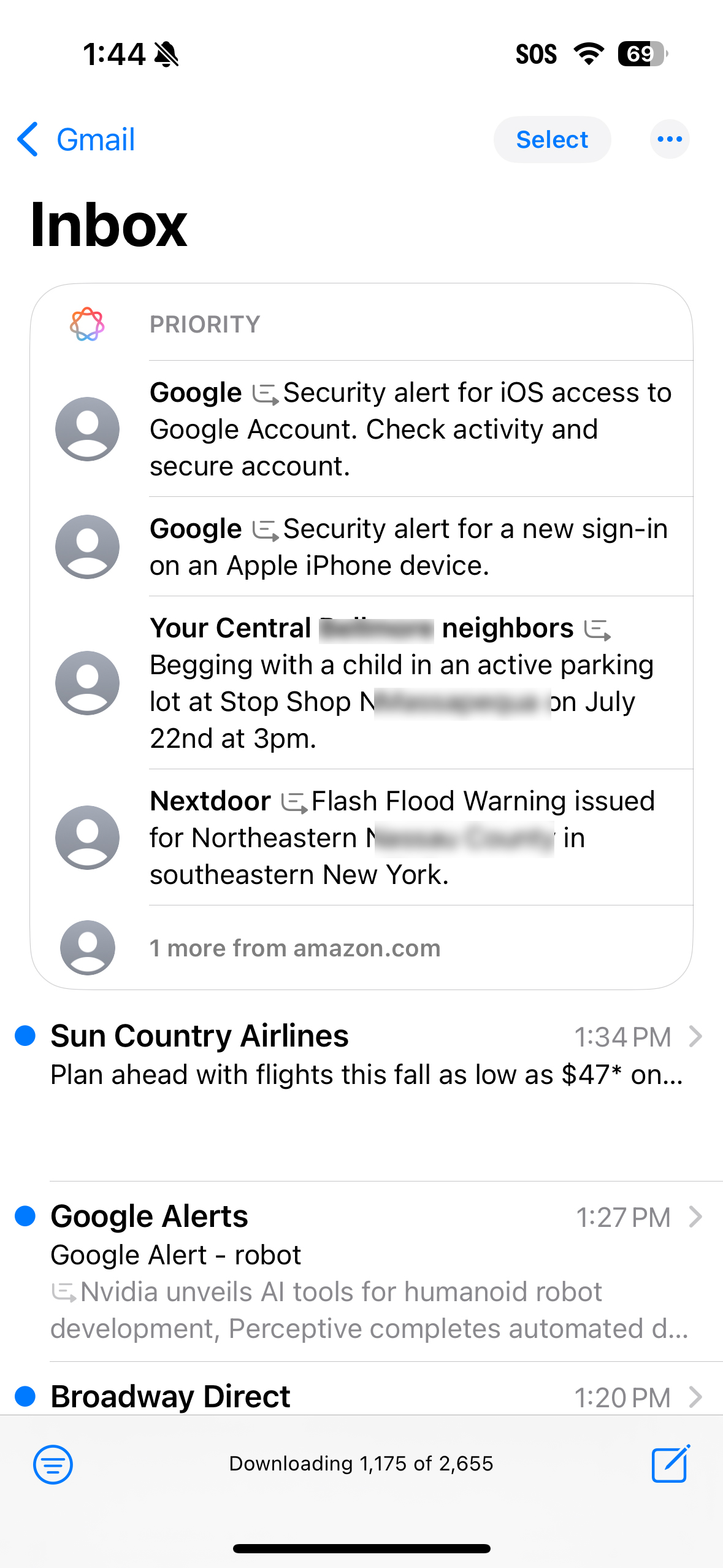

“Woah.” That was my honest reaction when I saw the new Apple Intelligence-powered Priority Section sitting at the top of my email box. It had everything from security alerts that I’d already addressed to some Nextdoor Neighbor alerts that might truly be a priority for me based on their utter weirdness.

I found I could open any of the emails directly from that box, but there’s currently no way to swipe one away as you can with regular email. I guess that’s the point of “priority.”

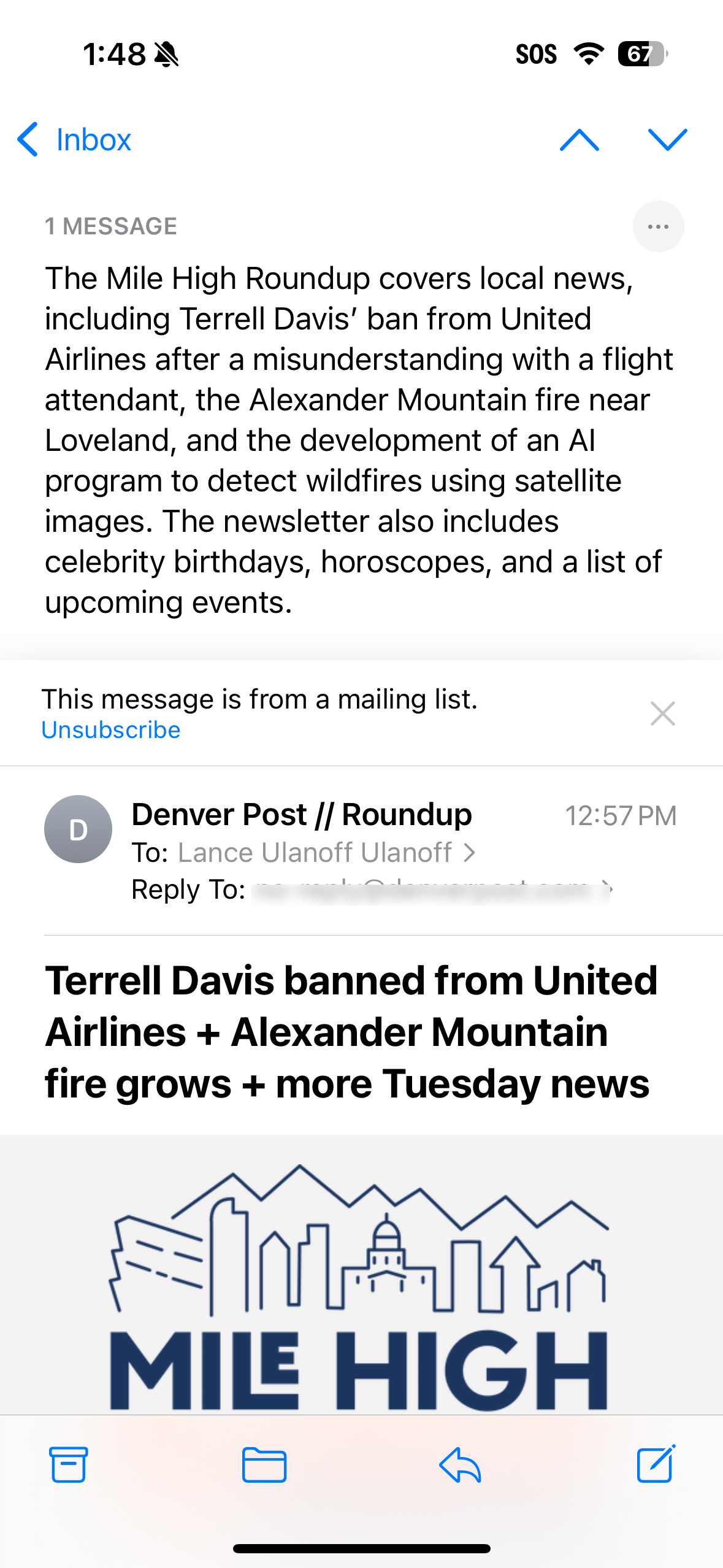

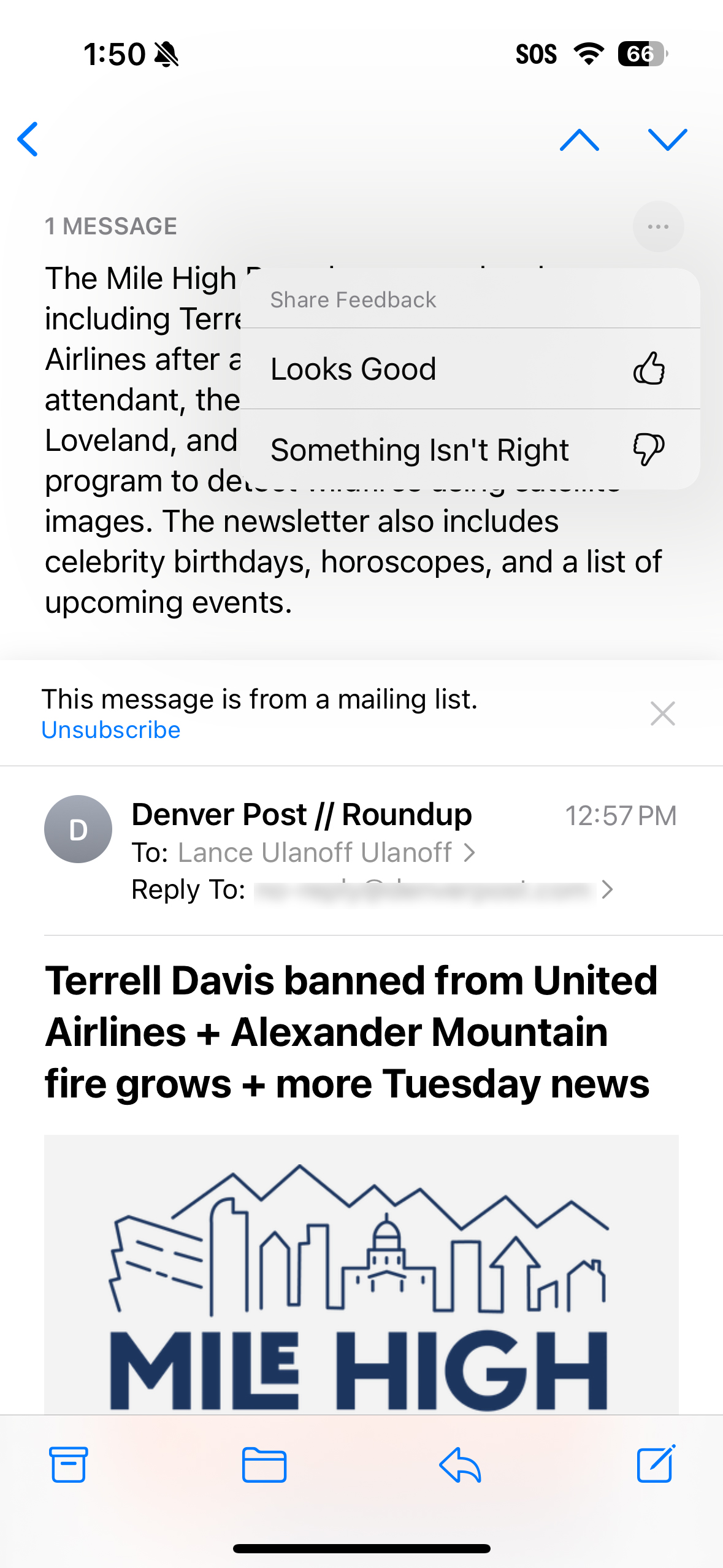

The Summarize tool is pretty good and gave me brief, precise boil-downs for all kinds of emails. I mostly used it on lengthy newsletters. I think the summaries were accurate, though I could report to Apple with a thumbs down if they were not. I’m betting that people will come to use these quite often and may no longer read long emails. Another potential time saver or possible avenue for missing nuance. Guess we’ll see.

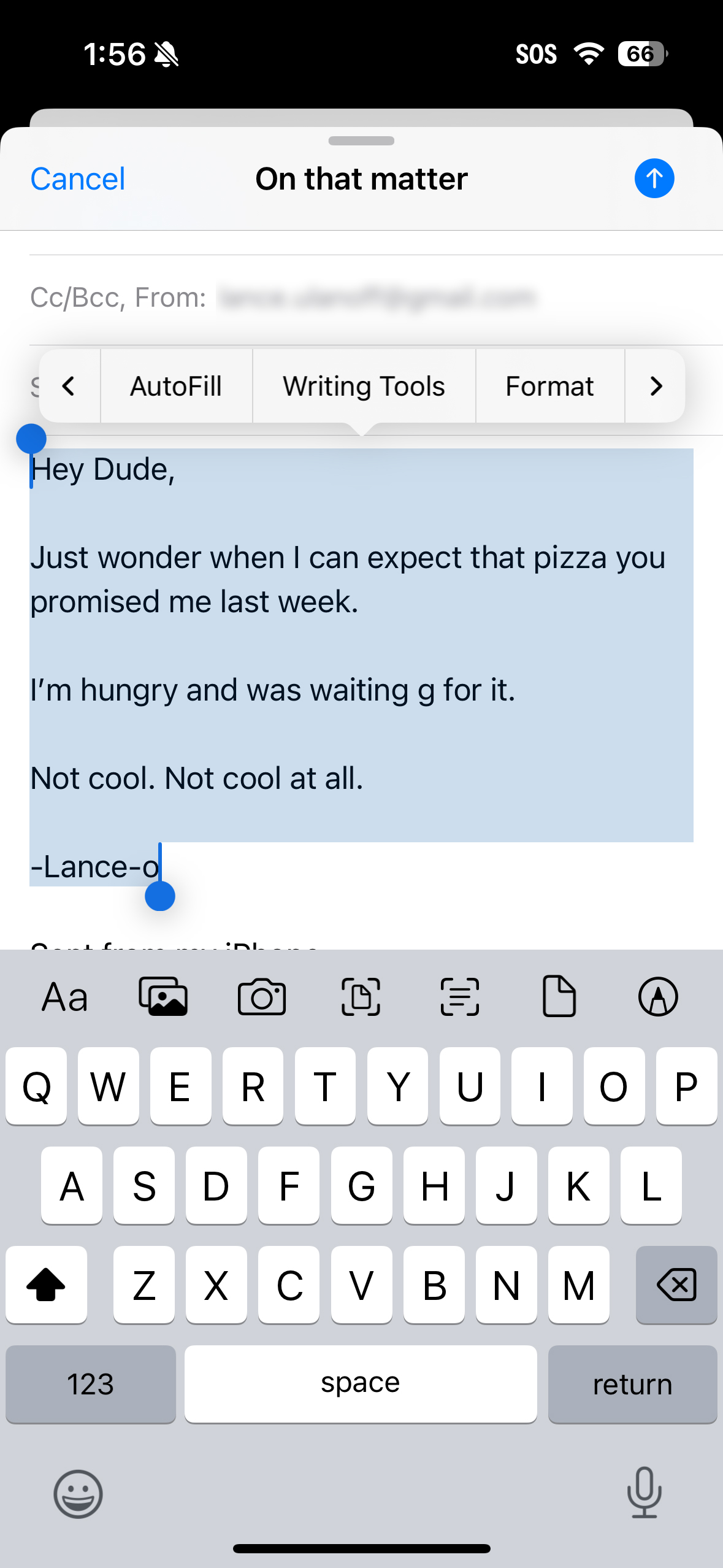

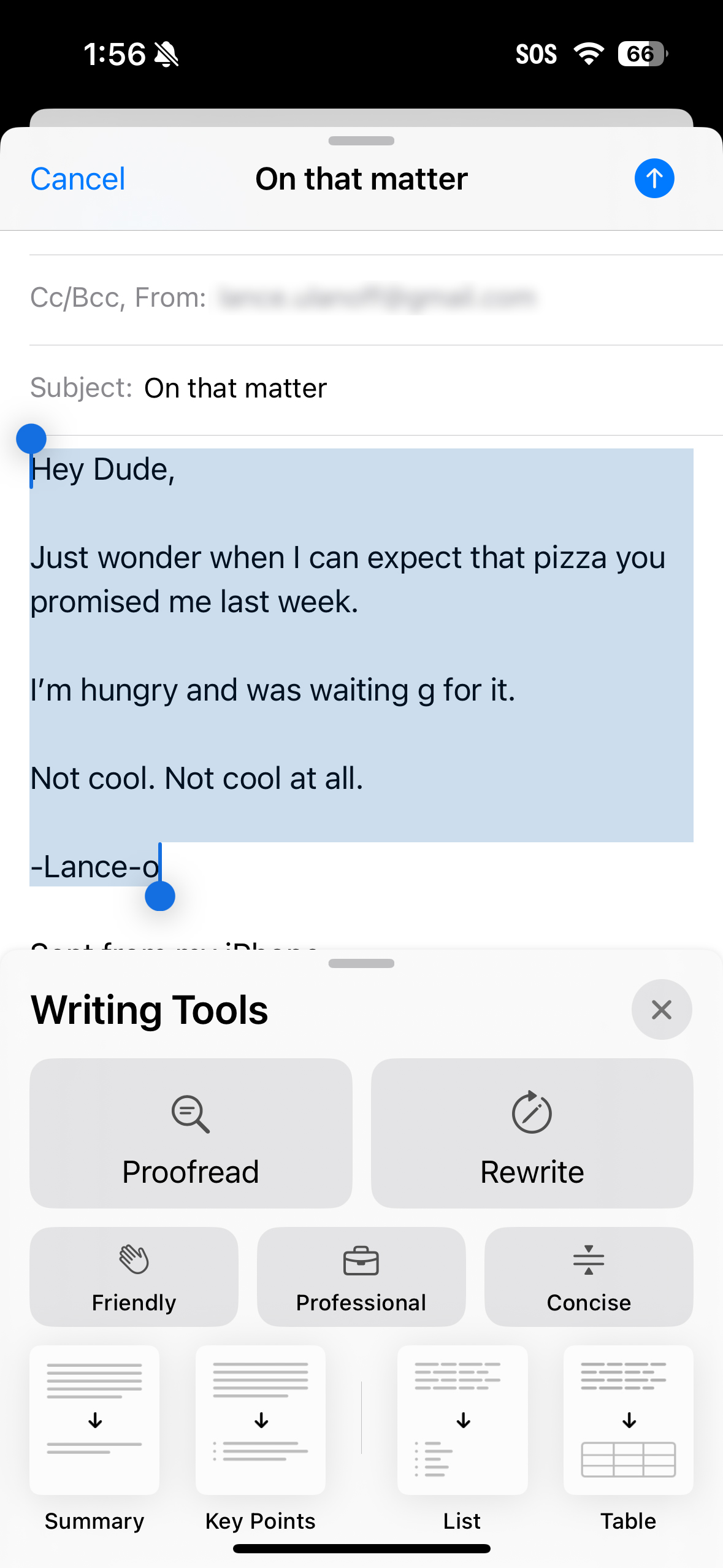

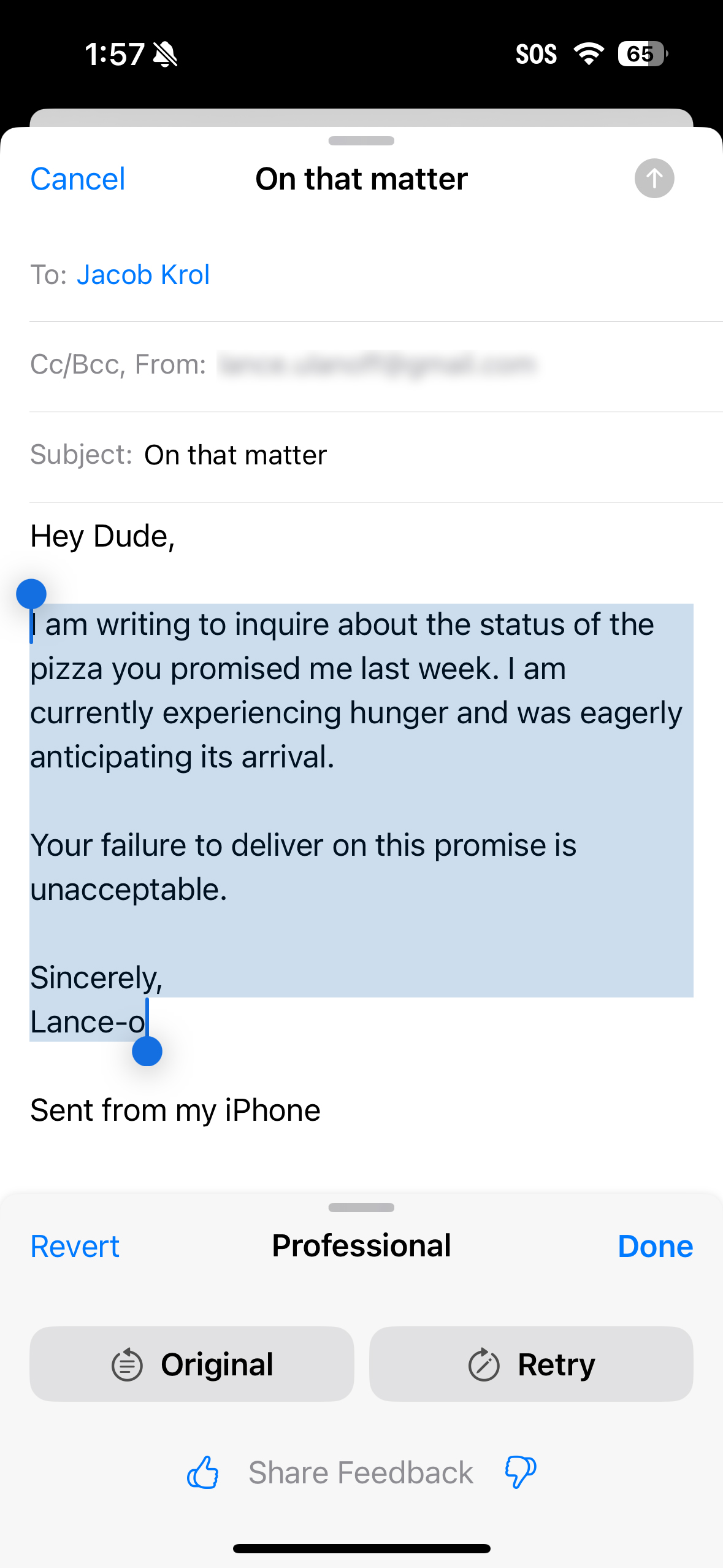

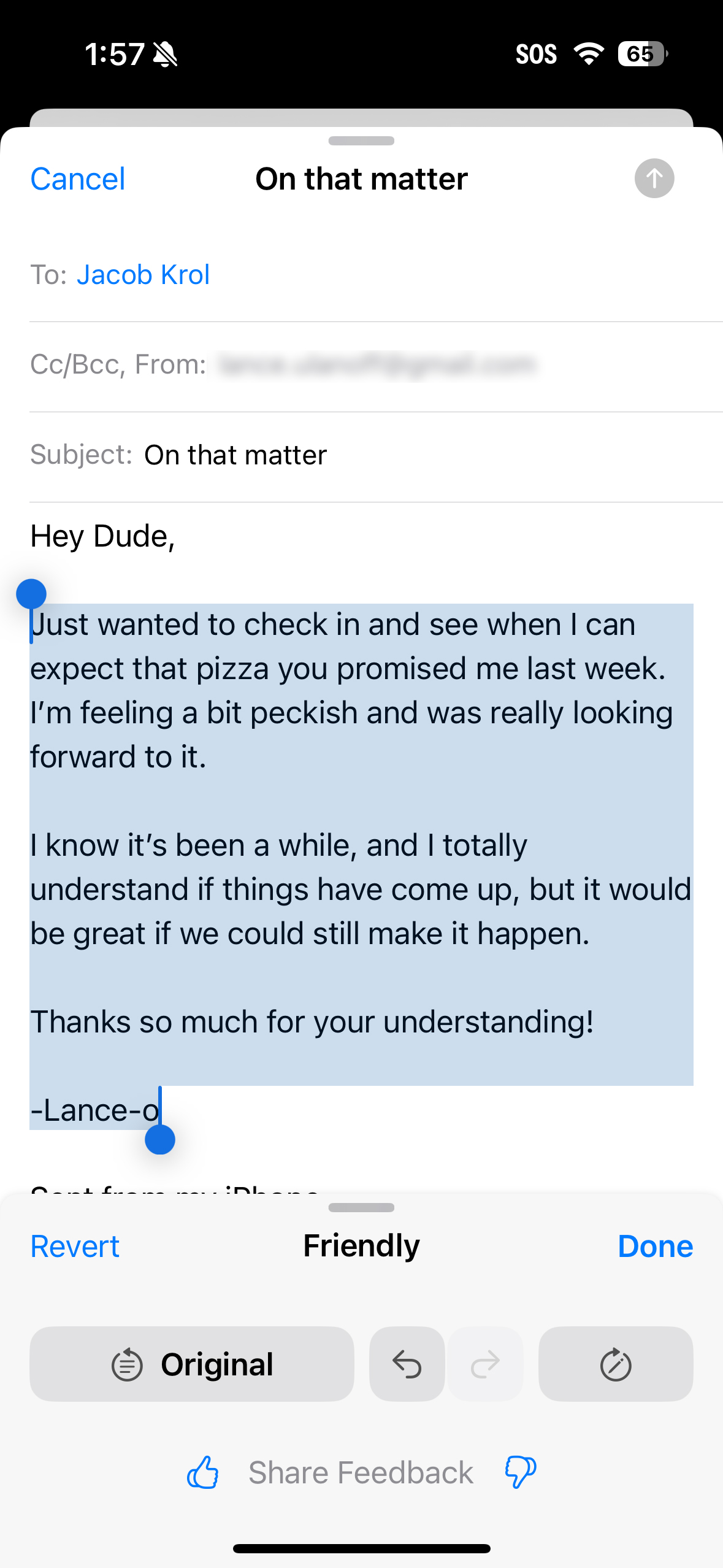

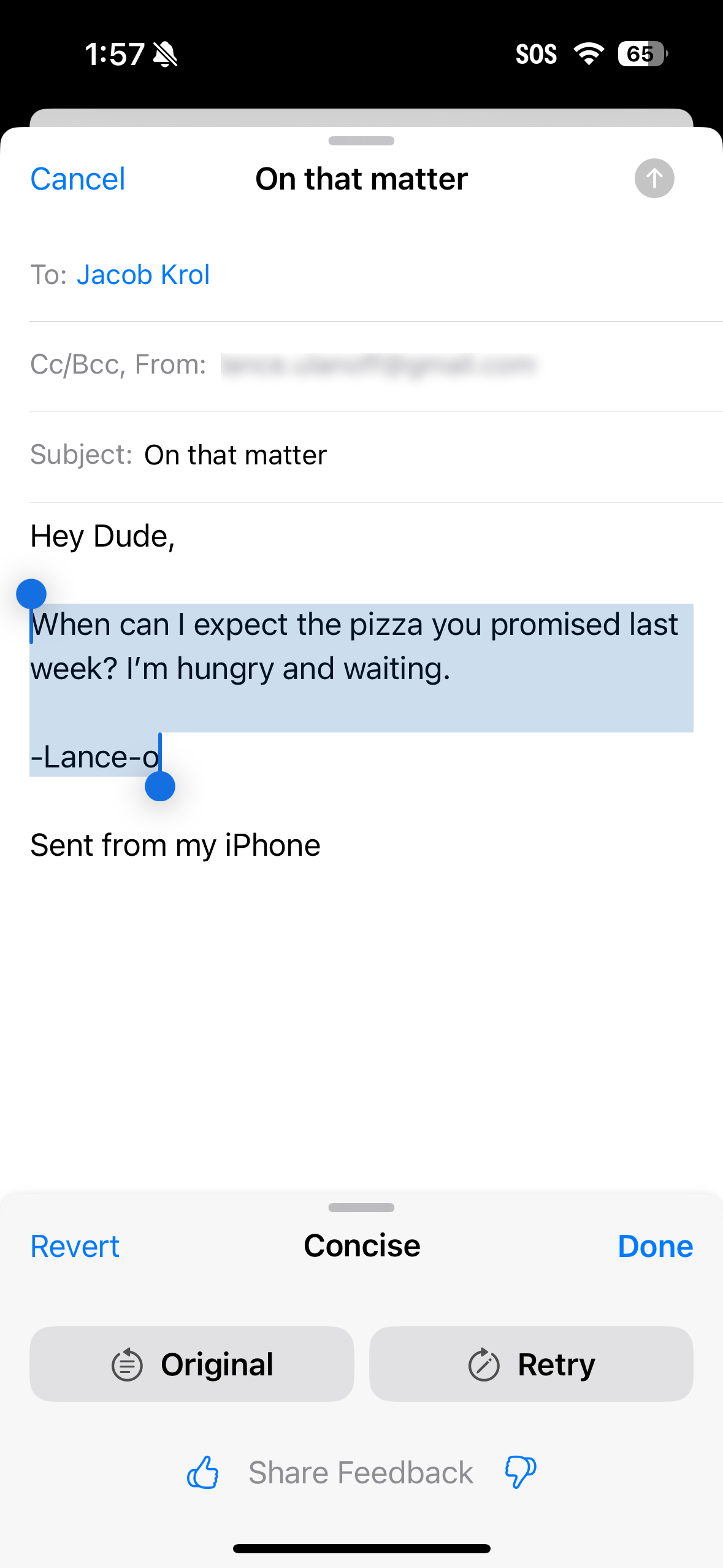

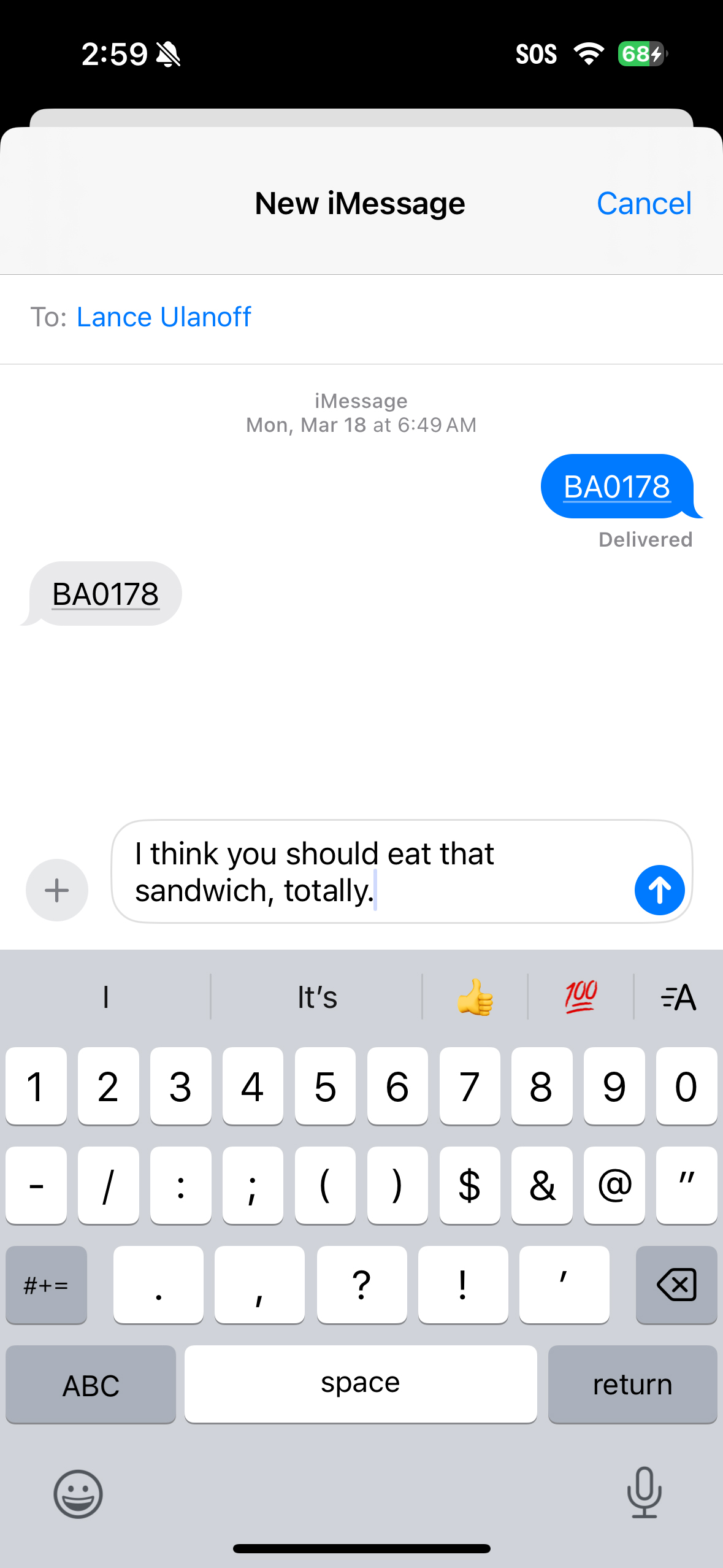

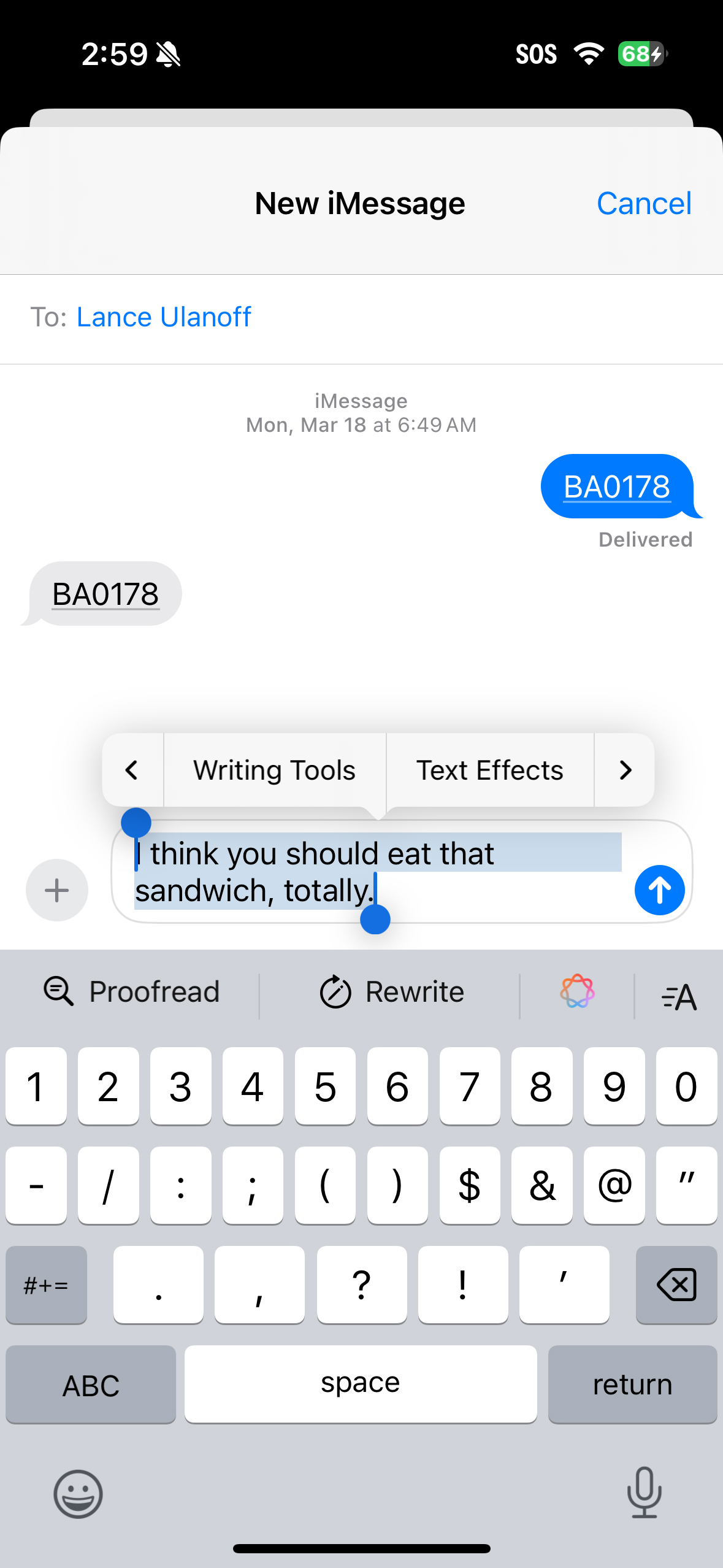

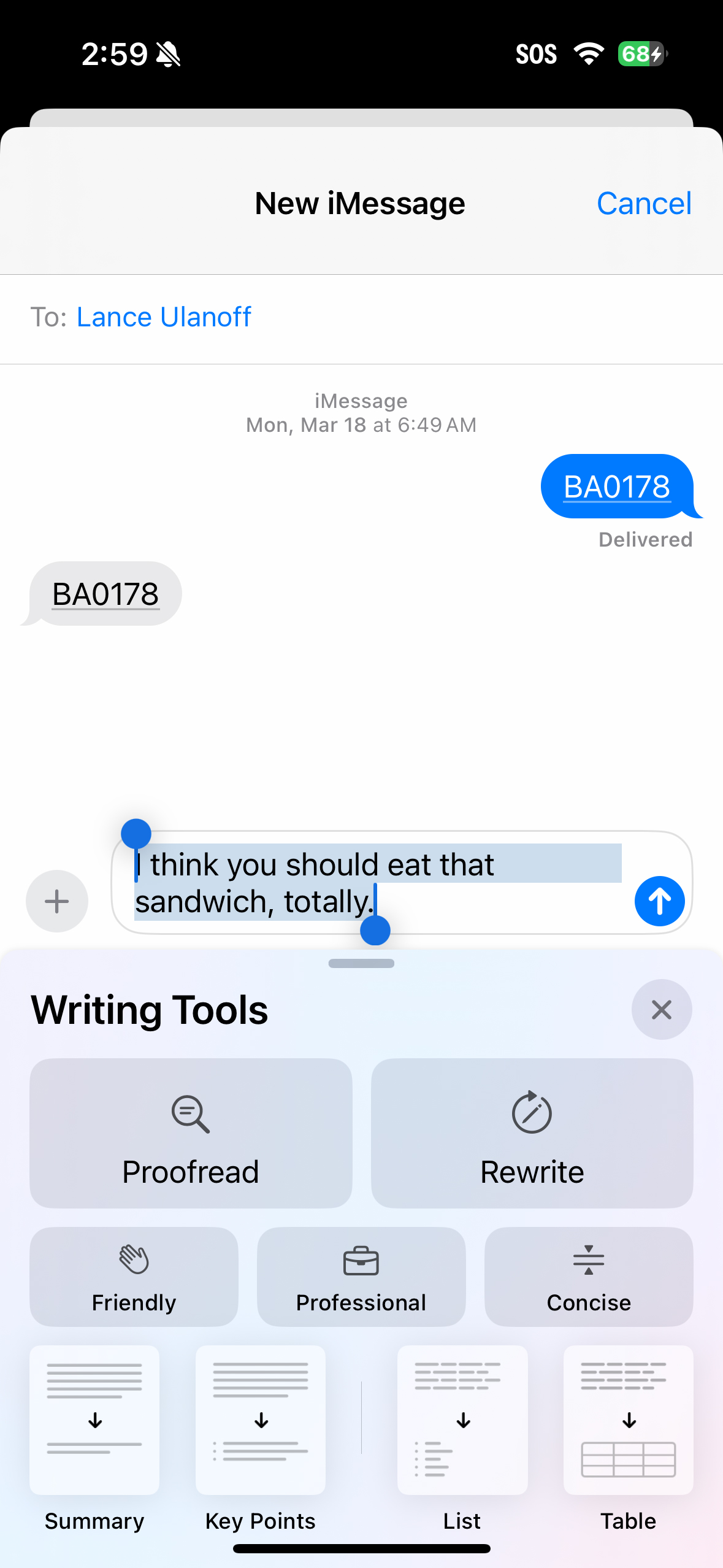

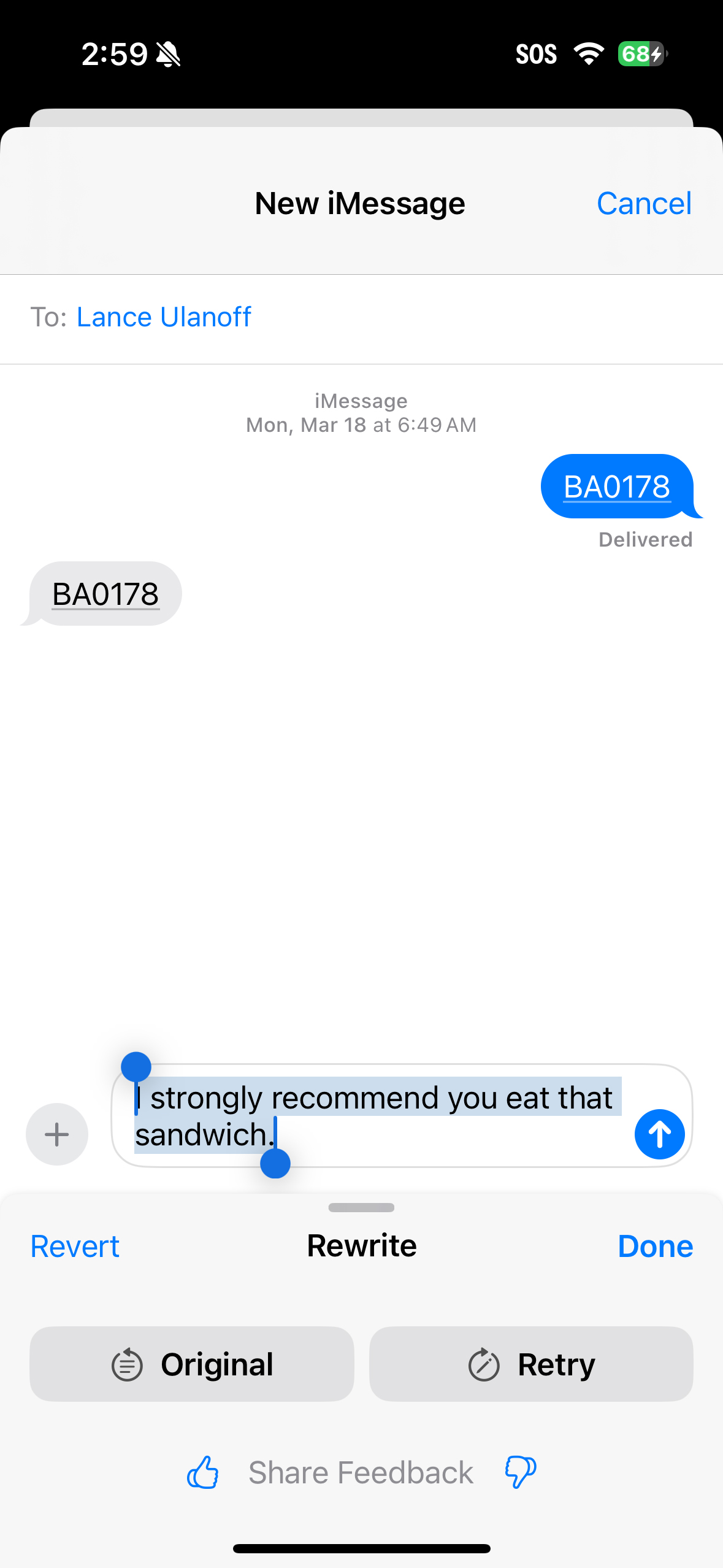

My favorite new Apple Intelligence feature, though, may be the Writing Tools. Sure, it’s a bit hidden under copy and paste, but it is already fast, powerful, and kind of fun.

I wrote a silly, poorly worded email to a coworker about a missing pizza, selected the text, and then slid over to the Writing Tools under Copy and Paste. The menu offers big buttons to proofread or rewrite and quick shortcuts to tones of voice: Friendly, Professional, or Concise. I can even get a summary or list of key points from my email. This email was brief, though, so I just needed the reinterpretations; they were spot on and a little funny, but that’s because the original email I wrote was silly.

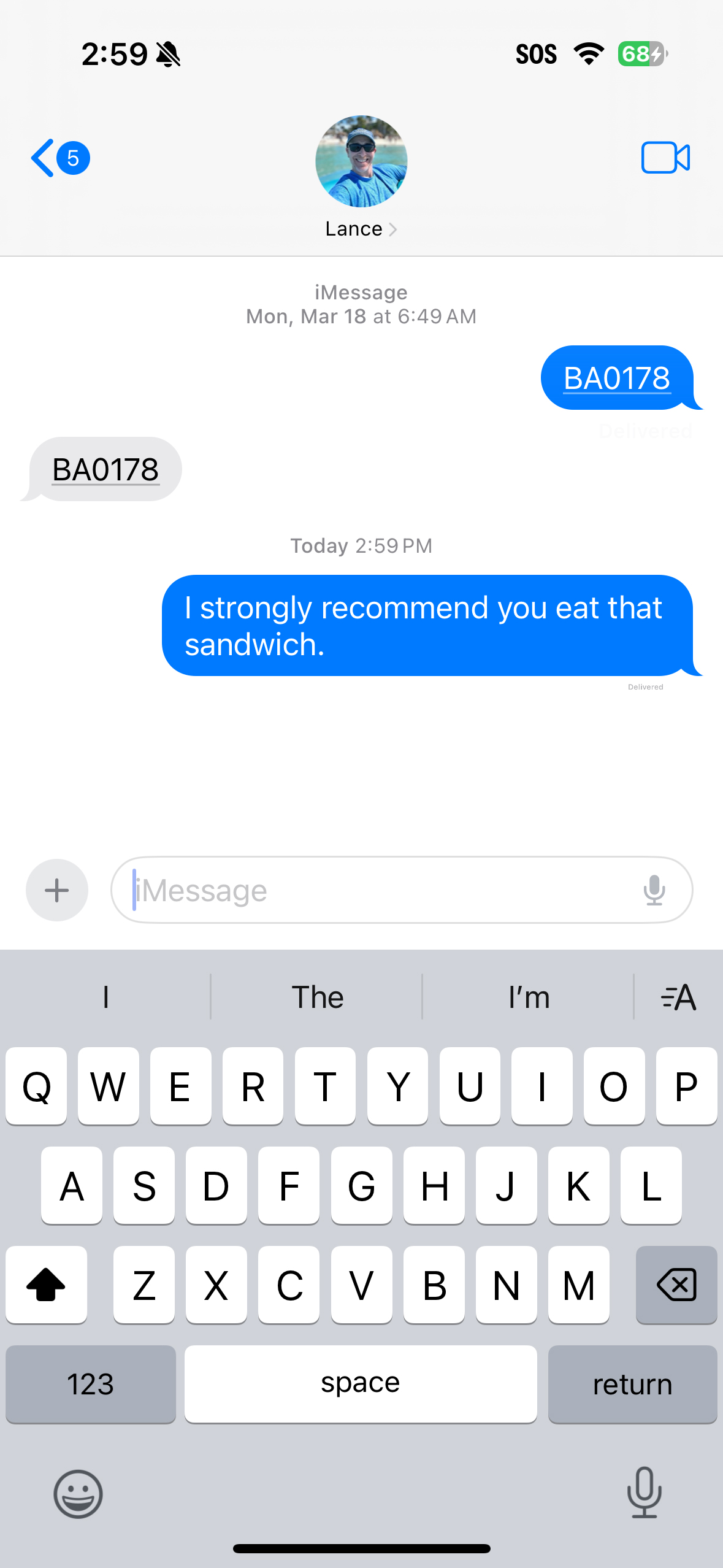

These tools work well in real-time conversations in iMessage, where I used them to ensure the right tone of voice.

A smarter phone

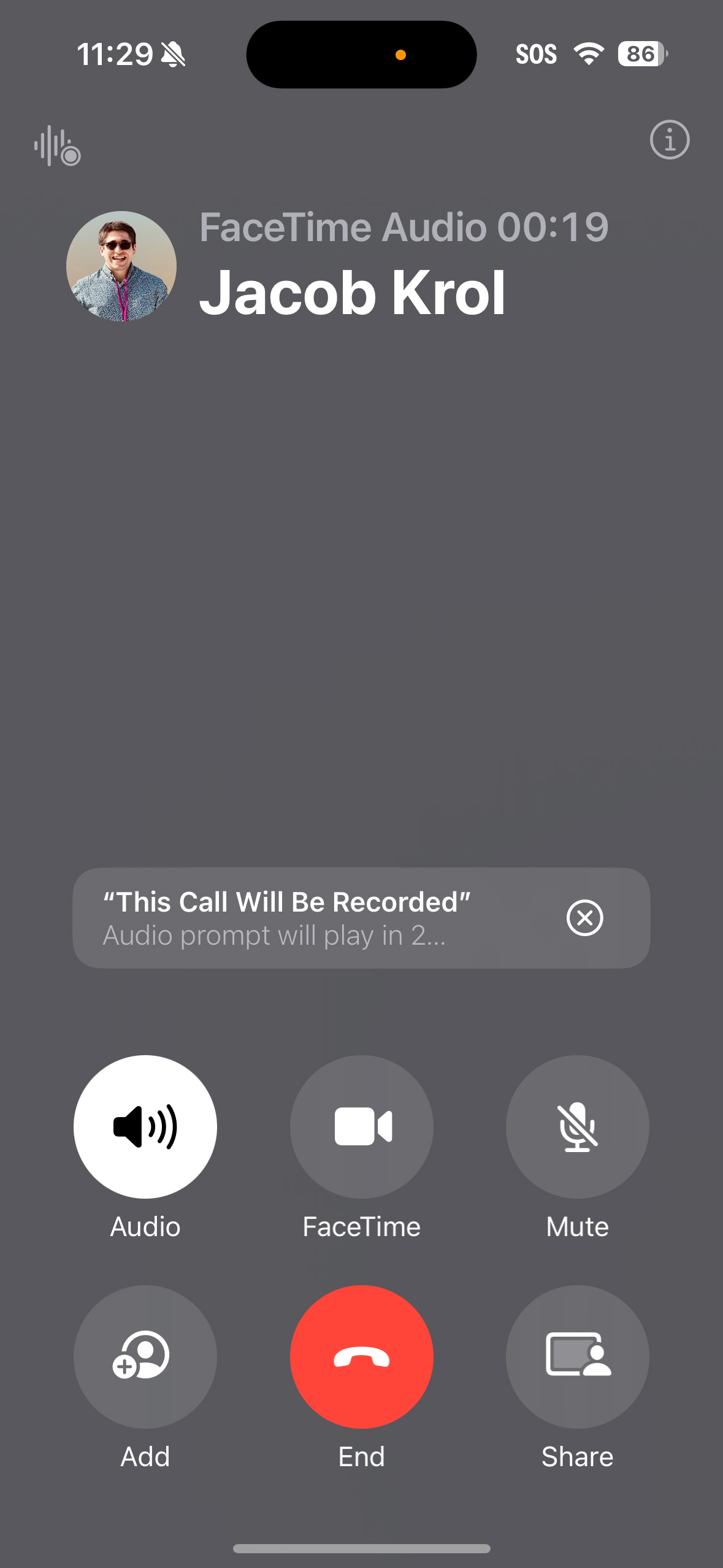

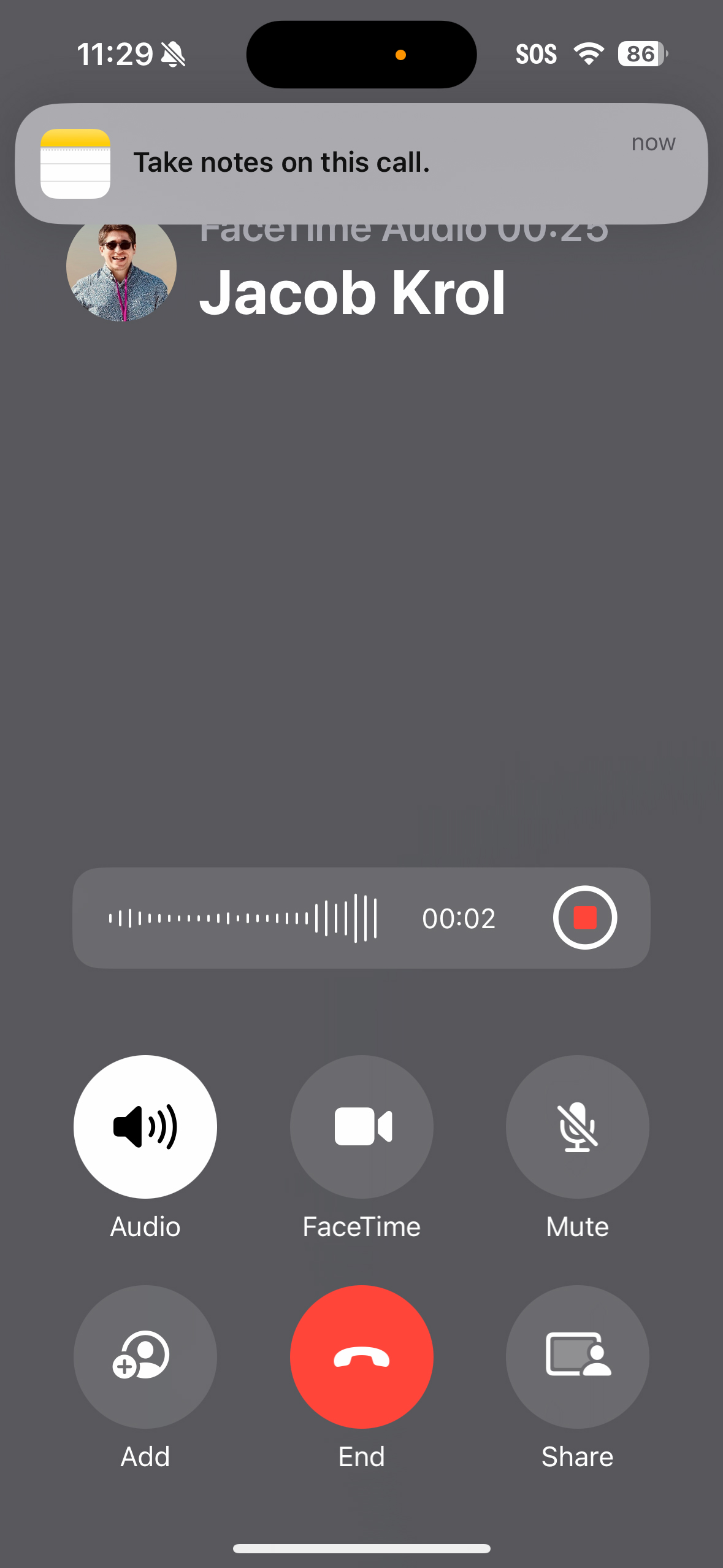

As a young cub reporter in the late 1980s, I used to attach a special microphone to the back of my handset. It snaked back to a tape recorder, capturing the audio for both sides of my interview. Today, we make calls on smartphones, which make it easier to capture audio. Perhaps nothing, though, will make it as frictionless as the new Phone call recording and transcription in iOS 18.1 dev beta.

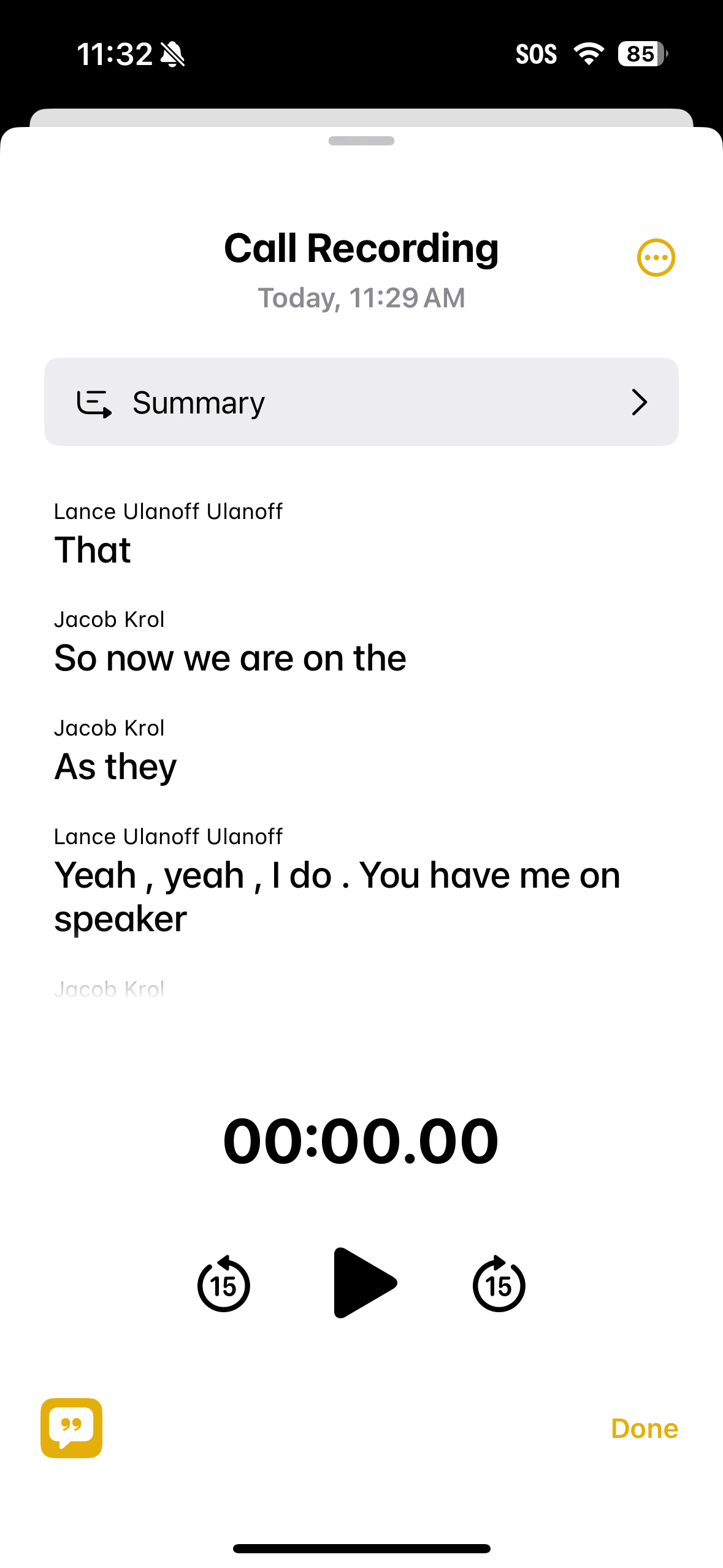

I conducted a brief two-minute call with my colleague, Jake. I initiated the call and tapped a little icon in the upper left-hand corner that let me record it. For transparency, the system announced to Jake that the call was being recorded. It also automatically captured the audio in the Notes app, where, within a minute of ending the call, I found a very accurate and clearly labeled transcription. I can tap on any section to replay the associated audio and search the entire transcription.

Honestly, if this were the only iOS 18 update, I would install it just for this.

Photos: making memories

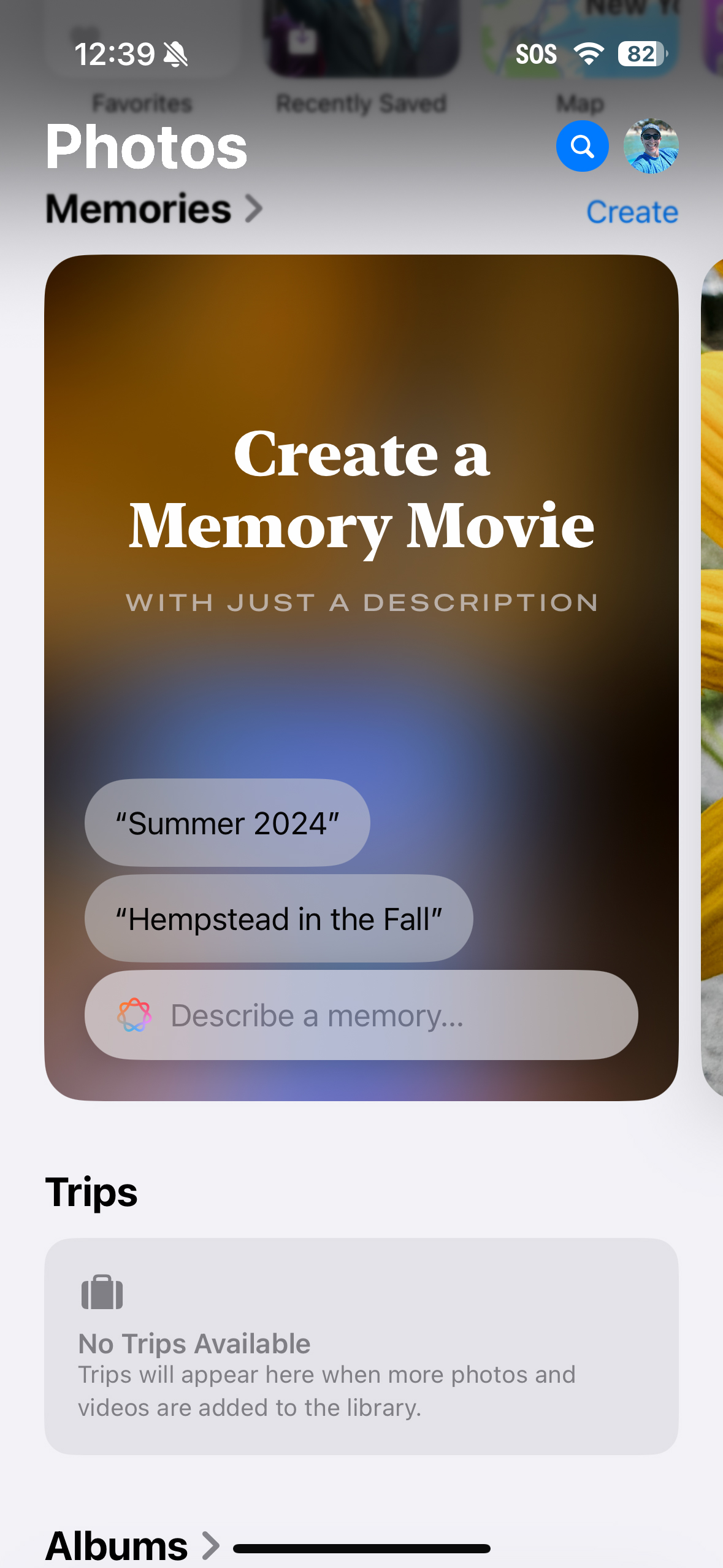

You know how your iPhone creates photo collages or memories for you without you asking for them? Sometimes, they hit the right emotional note, and you’re almost happy your phone did it. Other times, maybe not so much.

Apple Intelligence in iOS 18 combines the automation of that system with generative AI smarts to let you craft the perfect memory. At least, that’s the pitch.

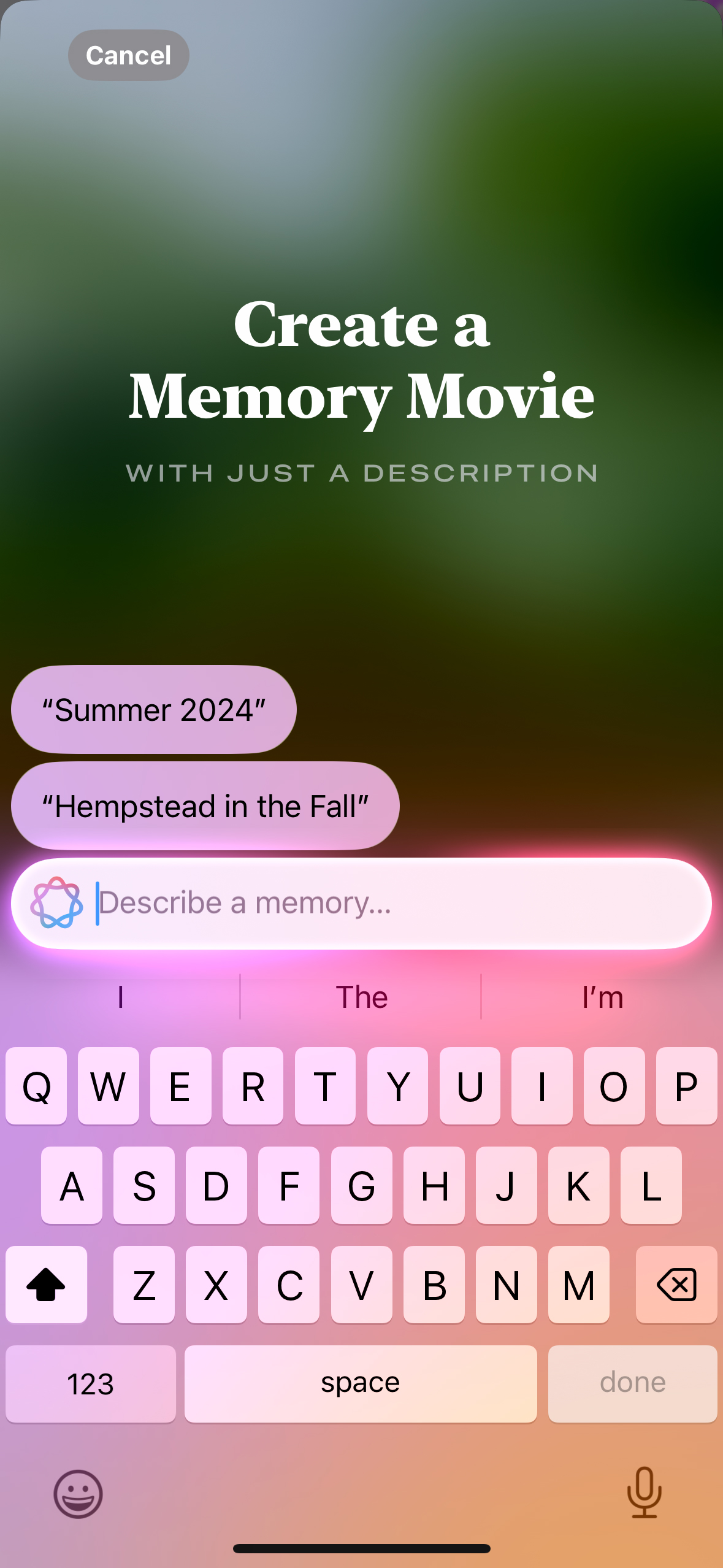

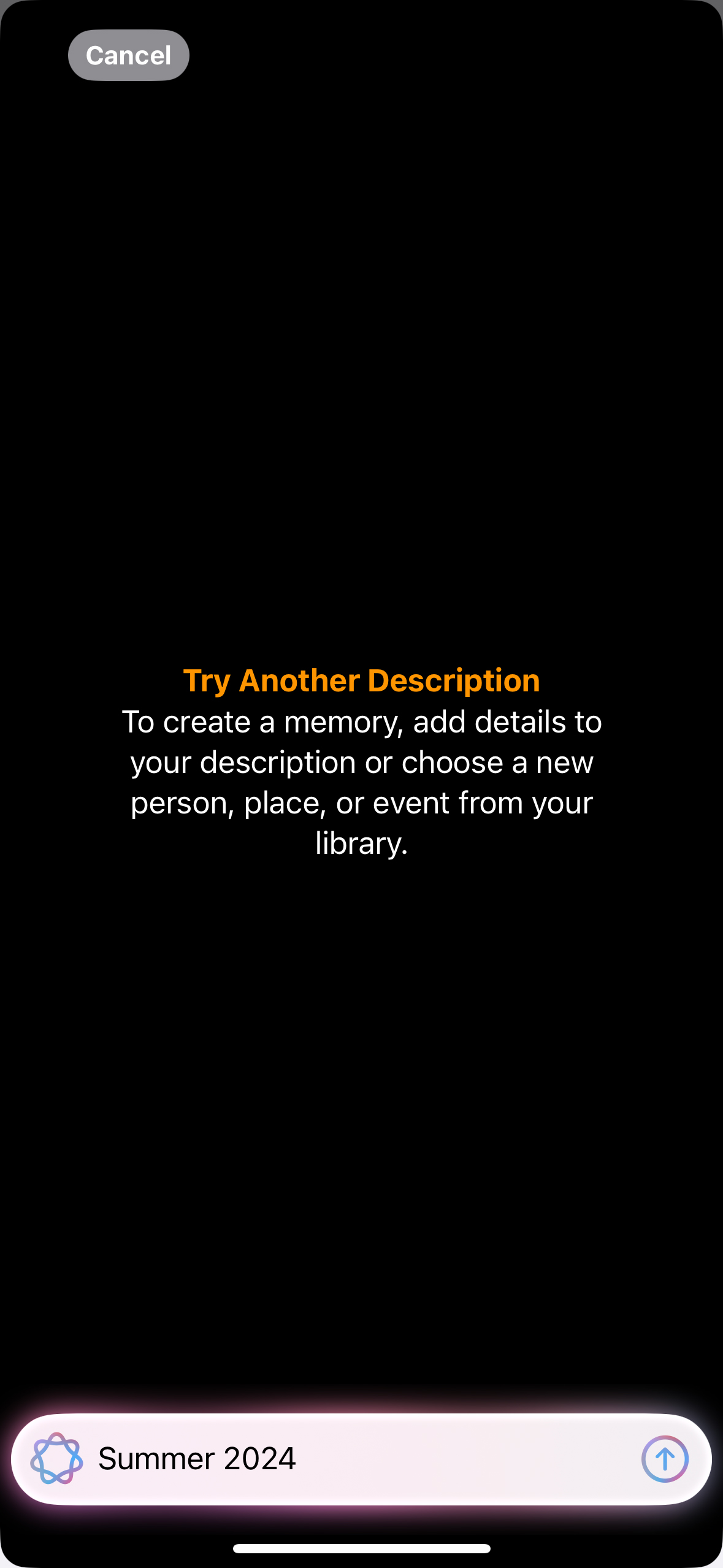

In iOS 18.1 dev beta, “Create a Memory Movie” stood ready to do my bidding, the prompt box asking me, like a psychiatrist, to “Describe a memory.” I tried various prompts, including some based on suggestions like “Summer 2024,” but all I got was a combination of Memory Movies that were off the mark or that did not work at all.

This is, of course, super early beta, so I’m not surprised. At least I could see how the interface operates and the “show your work” image collage animation that appears before the system plays back the memory movie (complete with music that I could alter with selections from Apple Music).

The system is also set to support natural language queries in search, which could bring Apple’s Photos app in line with Google Photos, where I’ve long been able to search for almost any combination of keywords and find exactly the images I need. In my very early experience, search in the iOS 18.1 Photos app would only work once it had finished indexing all my iCloud-based photos. Since I have tens of thousands of photos, it may take a while.

As I noted above, it’s very early days for Apple Intelligence. iOS 18.1 dev beta is a work in progress intended for developers and not recommended for the public. It’s incomplete and subject to change. Even when the iPhone 16 arrives (maybe September), Apple Intelligence may still be under development. Some features will arrive fully baked on the new phone, others will arrive in software updates, and some could slide all the way into next year.