Hands On The launch of Microsoft’s Copilot+ AI PCs brought with it a load of machine-learning-enhanced functionality, including an image generator built right into MS Paint that runs locally and turns your doodles into art.

The only problem is that you’ll need a shiny new Copilot+ AI PC to unlock these features. Well, to unlock Microsoft Cocreate anyway. If you’ve got a remotely modern graphics card, or even a decent integrated one, you’ve (probably) got everything you need to experiment with AI image generation locally on your machine.

Since Stable Diffusion’s debut nearly two years ago, Stability AI’s models have become the go-to for local image generation, owing to their compact size, relatively permissive license, and ease of access. Unlike proprietary models such as Midjourney or OpenAI’s Dall-e, Stable Diffusion can be downloaded and run on your own hardware.

Because of this, a slew of applications and services have cropped up over the past couple of years, designed to make it easier to deploy Stable Diffusion-derived models on all manner of hardware.

In this tutorial, we’ll be looking at how diffusion models actually work and exploring one of the more popular apps for running them locally on your machine.

Prerequisites:

Automatic1111’s Stable Diffusion Web UI runs on a wide range of hardware and, compared to the software in some of our other hands-on AI tutorials, it’s not terribly resource-intensive either. Here’s what you’ll need:

- For this guide you’ll need a Windows or Linux PC (we’re using Ubuntu 24.04 and Windows 11) or an Apple Silicon Mac.

- A compatible Nvidia or AMD graphics card with at least 4GB of vRAM. Any reasonably modern Nvidia card, or most 7000-series Radeon cards (some higher-end 6000-series cards may work too), should work without issue. We tested with Nvidia’s Tesla P4, RTX 3060 12G, and RTX 6000 Ada Generation, as well as AMD’s RX 7900 XT.

- The latest graphics drivers for your particular GPU.
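If you’re not sure how much vRAM your card has, here’s a minimal sketch for Nvidia GPUs. It assumes the Nvidia driver is installed, since that’s what provides nvidia-smi, and uses nvidia-smi’s --query-gpu and --format options to report each card’s total memory in MiB. The meets_vram helper and the 4096MiB threshold are our own illustration of the 4GB minimum, not a check A1111 performs itself, so treat the cutoff as a rough guide.

```shell
#!/usr/bin/env bash
# Minimal sketch: check whether each Nvidia GPU reports at least 4GB of vRAM.
# Assumes the Nvidia driver, and with it nvidia-smi, is installed. AMD cards
# need a different tool (such as rocm-smi), which this sketch doesn't cover.

MIN_VRAM_MIB=4096  # 4GB in MiB, the unit nvidia-smi reports with "nounits"

# Hypothetical helper: succeeds if the given vRAM size in MiB meets the minimum.
meets_vram() {
  [ "$1" -ge "$MIN_VRAM_MIB" ]
}

if command -v nvidia-smi >/dev/null 2>&1; then
  # One line per GPU, formatted like "0, 12288" (index, total vRAM in MiB).
  nvidia-smi --query-gpu=index,memory.total --format=csv,noheader,nounits |
    while IFS=', ' read -r idx mem; do
      if meets_vram "$mem"; then
        echo "GPU $idx: $mem MiB of vRAM, meets the 4GB minimum"
      else
        echo "GPU $idx: $mem MiB of vRAM, below the 4GB minimum"
      fi
    done
else
  echo "nvidia-smi not found; check your vRAM with your GPU vendor's tools." >&2
fi
```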

The basics of diffusion models

Before we jump into deploying and running diffusion models, it’s probably worth taking a high-level look at how they actually work.

In a nutshell, diffusion models have been trained to take random noise and, through a series of denoising steps, arrive at a recognizable image or audio sample that’s representative of a specific prompt.

The process of training these models is also fairly straightforward, at least conceptually. A large catalog of labeled images, graphics, or sometimes audio samples (often ripped from the internet) is imported, and increasing levels of noise are applied to them. Over the course of millions, or even billions, of samples, the model is trained to reverse this process, going from pure noise to a recognizable image.
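For readers who want the math, one common way to write this training recipe down is the denoising diffusion probabilistic model (DDPM) formulation below. Treat it as an illustrative sketch of the general idea rather than the exact setup behind any particular Stable Diffusion release. Here $x_0$ is a training image, $t$ is a randomly chosen noise level, $\bar\alpha_t$ is a fixed schedule that falls from near 1 toward 0 as $t$ increases, $c$ is the vector derived from the image’s label, and $\epsilon_\theta$ is the network being trained to predict the noise that was added:

```latex
x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1 - \bar\alpha_t}\,\epsilon,
\qquad \epsilon \sim \mathcal{N}(0, I)

\mathcal{L}(\theta) = \mathbb{E}_{x_0,\,c,\,t,\,\epsilon}
  \left[ \left\lVert \epsilon - \epsilon_\theta(x_t, t, c) \right\rVert^2 \right]
```

At large $t$, $x_t$ is almost pure noise; at small $t$ it is close to the original image. Learning to predict $\epsilon$ at every level is what lets the model walk noise back to a picture one step at a time.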

During this process both the data and their labels are converted into associated vectors. These vectors serve as a guide during inferencing. Asked for a “puppy playing in a field of grass,” the model will use this information to guide each step of the denoising process toward the desired outcome.
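Stable Diffusion does this steering with a technique called classifier-free guidance, which is what A1111’s CFG Scale setting controls. In simplified form, at each denoising step the network’s noise estimate $\epsilon_\theta$ is computed twice for the current image $x_t$ at step $t$: once given the prompt’s vector $c$ and once given an empty prompt $\varnothing$. The result is then extrapolated from the unconditioned estimate toward the prompted one by a guidance scale $s$:

```latex
\hat{\epsilon} = \epsilon_\theta(x_t, t, \varnothing)
  + s \left( \epsilon_\theta(x_t, t, c) - \epsilon_\theta(x_t, t, \varnothing) \right)
```

With $s = 1$ this reduces to the plain prompt-conditioned estimate; larger values push each step harder toward the prompt.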

To be clear, this is a gross oversimplification, but it provides a basic overview of how diffusion models are able to generate images. There’s a lot more going on under the hood, and we recommend checking out Computerphile’s Stable Diffusion explainer if you’re interested in learning more about this particular breed of AI model.

Getting started with Automatic1111

Arguably the most popular tool for running diffusion models locally is Automatic1111’s Stable Diffusion Web UI.

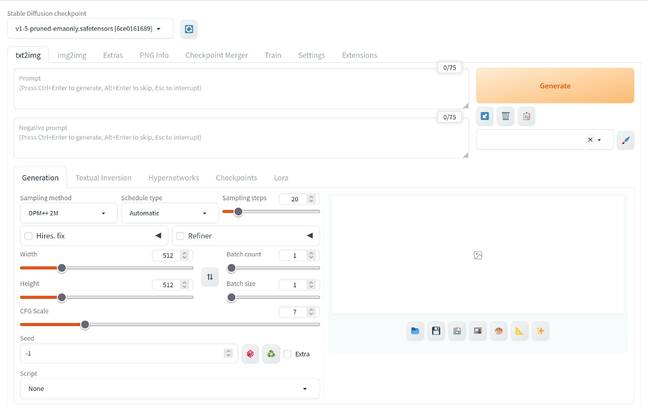

Automatic1111’s Stable Diffusion WebUI provides access to a wealth of tools for tuning your AI-generated images

As the name suggests, the app provides a straightforward, self-hosted web GUI for creating AI-generated images. It supports Windows, Linux, and macOS, and can run on Nvidia, AMD, Intel, and Apple Silicon with a few caveats that we’ll touch on later.

The actual installation varies depending on your OS and hardware, so feel free to jump to the section relevant to your setup.

Note: To make this guide easier to consume we’ve broken it into four sections:

- Introduction and installation on Linux

- Getting running on Windows and macOS

- Using the Stable Diffusion Web UI

- Integration and conclusion

Intel graphics support

At the time of writing, Automatic1111’s Stable Diffusion Web UI doesn’t natively support Intel graphics. There is, however, an OpenVINO fork that does, on both Windows and Linux. Unfortunately, we were unable to test this method, so your mileage may vary. You can find more information on the project here.

Installing Automatic1111 on Linux — AMD and Nvidia

To kick things off, we’ll start with getting the Automatic1111 Stable Diffusion Web UI – which we’re just going to call A1111 from here on out – up and running on an Ubuntu 24.04 system. These instructions should work for both AMD and Nvidia GPUs.

If you happen to be running a different flavor of Linux, we recommend checking out the A1111 GitHub repo for more info on distro-specific deployments.
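If you’re unsure which distro or release you’re on, /etc/os-release, which most modern distributions ship, records both. This minimal sketch reads it through a hypothetical distro_release helper and flags anything other than Ubuntu 24.04.

```shell
#!/usr/bin/env bash
# Minimal sketch: report which distro and release this machine runs, so you can
# tell whether the Ubuntu 24.04 steps apply as written.

# Hypothetical helper: print "ID VERSION_ID" from an os-release file
# (defaults to /etc/os-release; the argument makes it easy to test).
distro_release() {
  # Source the file in a subshell so its variables don't leak into this shell.
  ( . "${1:-/etc/os-release}" && echo "${ID} ${VERSION_ID}" )
}

release=$(distro_release)
if [ "$release" = "ubuntu 24.04" ]; then
  echo "Ubuntu 24.04 detected; these steps should apply as written."
else
  echo "Detected '${release}'; check the A1111 GitHub repo for distro-specific notes."
fi
```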

Before we begin, we need to install a few dependencies, namely git and the software-properties-common package:

sudo apt install git software-properties-common -y
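To confirm the install worked before moving on, a quick check like the one below can help. It’s a minimal sketch: the missing_cmds helper is hypothetical and simply asks the shell whether git and add-apt-repository (the latter comes from software-properties-common) are on your PATH.

```shell
#!/usr/bin/env bash
# Minimal sketch: confirm the tools installed above are on your PATH.
# add-apt-repository is provided by the software-properties-common package.

# Hypothetical helper: print each named command that can't be found.
missing_cmds() {
  local cmd
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
  done
}

missing=$(missing_cmds git add-apt-repository)
if [ -n "$missing" ]; then
  echo "Still missing:" $missing >&2  # unquoted on purpose: prints on one line
else
  echo "git and add-apt-repository are available."
fi
```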

We’ll also need to grab Python 3.10. For better or worse, Ubuntu 24.04 ships Python 3.12 and doesn’t include the 3.10 release in its repos, so we’ll have to add the Deadsnakes PPA before we can pull the packages we need.

sudo add-apt-repository ppa:deadsnakes/ppa -y

sudo apt install python3.10-venv -y
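Because the rest of the guide leans on python3.10 specifically, it’s worth checking that the Deadsnakes packages installed correctly. The sketch below, built around a hypothetical is_py310 helper, confirms the interpreter reports a 3.10.x version and then tries to create a throwaway virtual environment, which, as we understand it, is the step that fails when the python3.10-venv package is missing.

```shell
#!/usr/bin/env bash
# Minimal sketch: confirm python3.10 from the Deadsnakes PPA is installed and
# can actually create a virtual environment.

# Hypothetical helper: succeeds if a "major.minor[.patch]" string is Python 3.10.
is_py310() {
  case "$1" in
    3.10 | 3.10.*) return 0 ;;
    *) return 1 ;;
  esac
}

if command -v python3.10 >/dev/null 2>&1; then
  ver=$(python3.10 -c 'import sys; print("%d.%d.%d" % sys.version_info[:3])')
  probe=$(mktemp -d)  # throwaway directory for a test venv
  if is_py310 "$ver" && python3.10 -m venv "$probe/venv" >/dev/null 2>&1; then
    echo "Python $ver can create virtual environments."
  else
    echo "python3.10 reports '$ver' but couldn't create a venv; is python3.10-venv installed?" >&2
  fi
  rm -rf "$probe"
else
  echo "python3.10 not found; re-check the Deadsnakes PPA steps." >&2
fi
```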

Note: In our testing, we found AMD GPUs required a few extra packages to get working, plus a restart.

# AMD GPUs only
sudo apt install libamd-comgr2 libhsa-runtime64-1 librccl1 librocalution0 librocblas0 librocfft0 librocm-smi64-1 librocsolver0 librocsparse0 rocm-device-libs-17 rocm-smi rocminfo hipcc libhiprand1 libhiprtc-builtins5 radeontop

# AMD GPUs only
sudo usermod -aG render,video $USER

# AMD GPUs only
sudo reboot
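Group changes made with usermod only apply to new login sessions, which is one reason for the reboot. Once you’re back, the hypothetical missing_groups helper sketched below checks the current session’s groups and lists any of render and video that are still missing.

```shell
#!/usr/bin/env bash
# Minimal sketch (AMD only): after the reboot, confirm the current session is in
# the render and video groups added by the usermod command.

# Hypothetical helper: print each requested group the current session lacks.
missing_groups() {
  local grp current
  current=" $(id -nG) "  # pad with spaces so only whole group names match
  for grp in "$@"; do
    case "$current" in
      *" $grp "*) ;;       # already a member
      *) echo "$grp" ;;
    esac
  done
}

missing=$(missing_groups render video)
if [ -z "$missing" ]; then
  echo "This session is in the render and video groups."
else
  echo "Missing groups:" $missing >&2  # unquoted on purpose: prints on one line
fi
```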

With our dependencies sorted out, we can now pull down the A1111 web UI using git and create a Python 3.10 virtual environment inside the project directory.

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui && cd stable-diffusion-webui

python3.10 -m venv venv
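Since Ubuntu 24.04’s default python3 is 3.12, it’s worth confirming that the venv directory just created really uses 3.10. As we understand it, webui.sh reuses an existing venv directory rather than building a new one, so a venv made with the wrong interpreter would carry through to the web UI. This minimal sketch assumes you’re in the stable-diffusion-webui directory; venv_python_minor is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Minimal sketch: check that the venv created above uses Python 3.10.
# Run from inside the stable-diffusion-webui directory.

# Hypothetical helper: print the "major.minor" version of a venv's interpreter.
venv_python_minor() {
  # "python --version" prints e.g. "Python 3.10.14"; keep only "3.10".
  "$1/bin/python" --version 2>&1 | awk '{ split($2, v, "."); print v[1] "." v[2] }'
}

if [ -x venv/bin/python ]; then
  minor=$(venv_python_minor venv)
  if [ "$minor" = "3.10" ]; then
    echo "venv uses Python $minor."
  else
    echo "venv uses Python $minor, not 3.10; delete it and recreate it with python3.10." >&2
  fi
else
  echo "No venv/bin/python here; are you in the stable-diffusion-webui directory?" >&2
fi
```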

Finally, we can launch the web UI by running the following.

./webui.sh

The script will begin downloading relevant packages for your specific system, as well as pulling down the Stable Diffusion 1.5 model file.
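Once the first launch finishes, you can confirm the model download landed where the web UI expects it. This sketch assumes the default checkpoint directory, models/Stable-diffusion inside the stable-diffusion-webui folder, and that checkpoints use the .safetensors or .ckpt extensions; list_checkpoints is a hypothetical helper, not part of A1111.

```shell
#!/usr/bin/env bash
# Minimal sketch: list the checkpoint files the web UI can see. Run from the
# stable-diffusion-webui directory after the first launch finishes downloading.

# Hypothetical helper: print the name of each .safetensors or .ckpt file
# in a directory (defaults to A1111's models/Stable-diffusion).
list_checkpoints() {
  local dir=${1:-models/Stable-diffusion} f
  for f in "$dir"/*.safetensors "$dir"/*.ckpt; do
    [ -e "$f" ] && basename "$f"  # skip unexpanded globs when nothing matches
  done
  return 0
}

ckpts=$(list_checkpoints)
if [ -n "$ckpts" ]; then
  echo "Checkpoints found:"
  echo "$ckpts"
else
  echo "No checkpoints found yet; the first launch may still be downloading." >&2
fi
```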

If the Stable Diffusion Web UI fails to load on AMD GPUs, you may need to modify the webui-user.sh file. This appears to be related to device support in the version of ROCm that ships with A1111. As we understand it, this should be resolved when the app transitions to ROCm 6 or later.
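Before applying the HSA_OVERRIDE_GFX_VERSION workaround, it can help to see which gfx target ROCm reports for your card, using rocminfo from the AMD package list earlier. The RX 7900 XT, for example, is a gfx1100 part, which is the target an override value of 11.0.0 tells ROCm to assume. This is a minimal sketch; gfx_targets is a hypothetical helper that just pulls gfx names out of rocminfo’s output.

```shell
#!/usr/bin/env bash
# Minimal sketch (AMD only): show which gfx target(s) ROCm reports.

# Hypothetical helper: read rocminfo-style text on stdin and print each
# distinct gfx target name (e.g. gfx1100) once.
gfx_targets() {
  grep -oE 'gfx[0-9a-f]+' | sort -u
}

if command -v rocminfo >/dev/null 2>&1; then
  rocminfo | gfx_targets
else
  echo "rocminfo not found; it's part of the AMD package list above." >&2
fi
```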

# AMD GPUs only
echo "export HSA_OVERRIDE_GFX_VERSION=11.0.0" >> ~/stable-diffusion-webui/webui-user.sh
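One caveat with that echo command: >> appends a fresh copy of the export line every time it runs. If you might re-run your setup steps, a guarded version like this minimal sketch avoids duplicates. The add_line_once helper is hypothetical, and the path assumes you cloned A1111 into your home directory, as in the git clone step earlier.

```shell
#!/usr/bin/env bash
# Minimal sketch: a re-runnable take on the echo >> command. Appends the export
# line only if an identical line isn't already in the file.

# Hypothetical helper: append a line to a file unless it's already present.
add_line_once() {
  local file=$1 line=$2
  grep -qxF -- "$line" "$file" 2>/dev/null && return 0
  # Start on a fresh line if the file doesn't already end with a newline.
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    echo >> "$file"
  fi
  echo "$line" >> "$file"
}

target="$HOME/stable-diffusion-webui/webui-user.sh"  # assumed clone location
if [ -f "$target" ]; then
  add_line_once "$target" "export HSA_OVERRIDE_GFX_VERSION=11.0.0"
else
  echo "$target not found; adjust the path to where you cloned A1111." >&2
fi
```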

If you’re still having trouble, check out our “Useful Flags” section for additional tips.

In the next section, we’ll dig into how to get A1111 running on Windows and macOS.