
A more in-depth look

We’ll have more in-depth looks at the newest ChatGPT model, o1, as it makes its way out of preview.

For now, we’ve broken down everything that OpenAI announced during day 1 of its 12 Days of AI.

Check out our full article to see what o1 brings to the table, alongside the new spendy ChatGPT Pro tier.

Not sure the $200 monthly fee is worth it, but it’s great to see OpenAI investing in its technology.

December 5, 2024

We’re only into day 1 of OpenAI’s 12 days of AI, but people are reacting to today’s announcements.

For the most part, I saw two different reactions: 1) concern over the $200 Pro tier price and 2) questions about who the o1 model is for.

For example, one Redditor weighing whether o1 is worth it wrote: “For average users no. Maybe if you’re using it to run mathematical and scientific thing sure.”

Excitement seems tempered, but it is only day 1, so more exciting things may be to come.

OpenAI releases report on o1 model safety

As part of announcing the new o1 model, OpenAI published the model’s system card, its safety report for o1.

In the hands of an expert, o1 can help plan a biological attack, but it wouldn’t be much help to a non-expert.

OpenAI doesn’t think o1 can help build a nuclear bomb, but it couldn’t test this fully because the relevant details are classified.

The model was rated medium risk, so OpenAI had to put additional safeguards in place. For example, it was better than other models at persuading people to say something and at convincing people to give money (although it raised less money overall, it convinced more people to donate).

Sam Altman announces “smartest model in the world”

we just launched two things:

o1, the smartest model in the world. smarter, faster, and more features (eg multimodality) than o1-preview. live in chatgpt now, coming to api soon.

chatgpt pro. $200/month. unlimited usage and even-smarter mode for using o1. more benefits to come!

December 5, 2024

On X, OpenAI CEO Sam Altman announced the new o1 model, calling it the “smartest model in the world.”

He also promised that more would be coming, presumably a nod to the next 11 days of AI.

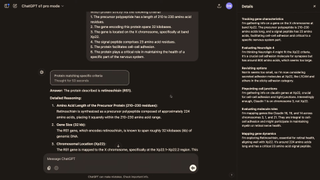

What’s new in ChatGPT o1?

In OpenAI’s reveal of the o1 model, Altman and crew said that we can “expect a faster, more powerful and accurate reasoning model that is even better at coding and math.”

The o1 model can now reason through image uploads and explain visual representations with “detail and accuracy.”

OpenAI also said the model is more “concise in its thinking,” resulting in faster responses.

Based on a press release, it appears that o1 is available now for all paid ChatGPT subscribers. Additional features will come online later, but OpenAI did not say when.

OpenAI introduces higher ChatGPT paid tier – ChatGPT Pro

During the first of 12 livestreams, OpenAI introduced a new tier for paid subscribers of ChatGPT called ChatGPT Pro.

This tier gives subscribers access to a slightly more powerful version of the newly announced o1 model, called o1 Pro.

A price was not revealed, though there are rumors that it will cost $200 a month.

The Pro mode seems geared specifically toward science- and math-based applications, as the somewhat stiff presentation showed the model working on history- and thermodynamics-related questions.

OpenAI unveils its impressive o1 reasoning model

OpenAI revealed the full version of its o1 reasoning model during the first of 12 livestreams it’s doing over the next two weeks. o1 is an incredibly powerful model that until now was only available in preview. The full version will be able to analyze images, code and text.

The slightly wooden tech preview included details of ways scientists could use the model to discover new technologies, as well as speed improvements that mean it no longer takes 10 seconds to say hello.

AI Editor Ryan Morrison said: “o1 hasn’t just been given an intelligence boost, but also a performance boost. It will think more intelligently before responding to a user query. This is significant in ways we won’t truly appreciate until we start using it.”

Day 1: Full version of o1, ChatGPT Pro

OpenAI’s 12 Days of AI is off, and Day 1 introduces some big changes to ChatGPT.

First up is the full version of o1, which OpenAI describes as “smarter, faster, multi-modal and with better instruction following.”

Overall, o1 hasn’t just been given an intelligence boost, but also a performance boost. It will think more intelligently before responding to a user query. For example, if you just say “hi,” it won’t spend ten seconds thinking about how to reply.

Many of the examples provided by Altman and crew have been specifically science-based.

On top of the newest version of ChatGPT, OpenAI is introducing a new tier for paid subscribers called ChatGPT Pro. Paying for this service gives you unlimited access to o1 and Advanced Voice Mode, as well as a slightly more powerful version of the o1 model.

And we’re off — the first live stream of the 12 days

OpenAI is live with its first live stream of the season. Over the next two weeks, there will be 12 streams marking 12 announcements. Some big, some small, all about AI.

You can watch along with the stream on the OpenAI website and we’ll have all the details here.

What can we expect over the next two weeks?

OpenAI has a big bag of tricks. This includes voice, video, text and coding models it has yet to release. We don’t even have the full version of GPT-4o, which can analyze music and video and even create images on its own without sending requests off to DALL-E.

Here are some of the things I expect to see over the two weeks, although I have no idea if, or in what order, they might be released:

- Sora

- o1 model

- Advanced Voice Vision

- GPT-4o image generation

- Canvas upgrades

- Video analysis in ChatGPT

- Voice Search

OpenAI’s o2 could do more than we ever thought possible

OpenAI’s o1 model improved reasoning through a deliberate problem-solving process, among other changes. So, what’s next? We expect the upcoming o2 model to further improve AI’s ability to accomplish complex tasks while offering more contextually relevant outputs. In other words, o2 should be sharper and faster, with more refined chain-of-thought processing.

The o2 model is expected to offer more sophisticated reasoning than its predecessor. This newer model could be better equipped to tackle intricate problems such as advanced mathematics, scientific research and complex coding.

In terms of safety and alignment, o2 is likely to build upon the frameworks established by o1, while further incorporating advanced measures to ensure responsible AI. This could include improved adherence to safety protocols and a better understanding of ethical considerations.

It’s clear that OpenAI aims to mitigate the potential risks associated with high-level AI reasoning capabilities, and we could see further evidence of that with the next model.

OpenAI’s Canvas could become free for all users

Coding and writing in ChatGPT could get an update through Canvas, the new interface within ChatGPT designed to enhance collaboration. Unlike the traditional chatbot view, Canvas opens a separate window where users can directly edit text or code, highlight specific sections for focused feedback, and receive inline suggestions from ChatGPT.

This interactive approach is a dream come true for content creation, enabling users to refine their projects with better precision and context awareness. We expect to see a suite of updates to the shortcuts that streamline tasks for coders, such as code review, bug fixing and translating code.

Writers can expect features for adjusting document length and reading level to help polish their work, along with grammar and clarity tools.

Currently in beta, Canvas is accessible to ChatGPT Plus and Team users, but we’re guessing OpenAI plans to extend availability, especially once it exits the beta phase.

Tom’s Guide publisher strikes a deal with OpenAI

Future Plc, the publisher of Tom’s Guide, TechRadar, Marie Claire and over 200 specialist media brands, has struck a deal with OpenAI to bring some of that expert content into ChatGPT.

“Our partnership with OpenAI helps us achieve this goal by expanding the range of platforms where our content is distributed. ChatGPT provides a whole new avenue for people to discover our incredible specialist content,” said Future CEO Jon Steinberg.

Next time you’re looking for a review of a great laptop or help cleaning your air fryer, you’ll be able to find links to some of our best content inside ChatGPT. You can already search for me: just type “Ryan Morrison, Tom’s Guide” into ChatGPT Search and it will give you my work history and links to some of my best stories.

More news about ‘Operator’ could be coming

Another highly anticipated announcement from OpenAI is more information about the company’s latest AI agent, codenamed ‘Operator.’ This AI agent is designed to perform tasks on behalf of users. From writing code to booking an airplane ticket, Operator is said to enable ChatGPT to execute actions from a web browser rather than simply providing information.

This significant advancement in AI capabilities aligns with OpenAI’s broader strategy to integrate AI more deeply into daily activities, which could enhance user productivity and efficiency. Of course, this move also keeps OpenAI competitive with rivals like Google and Anthropic, which are also exploring autonomous AI agents to automate complex tasks.

As OpenAI prepares for Operator’s launch, we are eagerly anticipating the potential for more applications and the implications of this groundbreaking technology.

The introduction of Operator is expected to transform how users interact with AI, shifting from passive information retrieval to active task execution.

Advanced Voice might soon be able to see you

OpenAI is preparing to enhance ChatGPT’s capabilities by introducing a “Live Camera” feature, enabling the AI to process real-time video input. First demonstrated earlier this year, ChatGPT’s ability to recognize objects and interpret scenes through live video feeds may be closer to release than we think. This advancement would allow ChatGPT to analyze visual data from a user’s environment, providing more interactive and context-aware responses.

Although this is still speculation, recent analyses of beta version 1.2024.317 of the ChatGPT Android app have uncovered code references to the “Live Camera” functionality. These findings suggest that the feature is nearing a broader beta release. The code indicates that users will be able to activate the camera to let ChatGPT view and discuss their surroundings, integrating visual recognition with the existing Advanced Voice Mode.

While OpenAI has not provided a specific timeline for the public rollout of the Live Camera feature, its presence in beta code implies that testing phases are underway. Users are advised not to rely on the Live Camera for critical decisions, such as live navigation or health-related choices, underscoring the feature’s current developmental status.

The integration of live video processing into ChatGPT represents a significant step toward more immersive AI interactions. By combining visual input with conversational AI, OpenAI aims to create a more dynamic and responsive user experience, bridging the gap between digital and physical environments.

A look ahead to future updates?

Over the next 12 days I’m expecting new products, models and functionality from OpenAI. Within that I also suspect we’ll get some ‘previews’ of products not set for release until the new year.

A lot of what’s announced is likely to be available to try, if not today then in the coming weeks, but it wouldn’t be an OpenAI announcement if it didn’t include some hints at what is yet to come.

So what might be announced but not released?

One safe bet in this area is some form of agent system — possibly Operator — as this is something every other AI company is also working on. This would allow ChatGPT to control your computer or perform actions on your behalf online.

Google has Jarvis coming, and Anthropic has Claude with Computer Use. Agents are widely seen as the next step towards Artificial General Intelligence.

I suspect we may also get a hint at a next-generation model or a direction of travel for future models as we move away from GPT and into o1-style reasoning models.

Slightly more out there as an idea is a potential new name for ChatGPT. OpenAI reportedly spent millions on the chat.com domain name, so it may move in that direction.

Setting Advanced Voice free on the internet

Advanced Voice is one of the most powerful of OpenAI’s products. That is not just because it can mimic natural speech and respond as naturally as a human, but also because developers can build it into smart speakers or call centers.

Being powerful doesn’t mean it is perfect. It can be improved, for example by giving it live search access. Right now, if you talk to Advanced Voice, it can only respond based on its training data, its memory or what you’ve told it during that conversation.

Giving Advanced Voice access to search would make it significantly more useful, as you could use it to check the weather, stock prices, sports scores or the latest news.

I’d like to see it go even further and give Advanced Voice access to my calendar, emails and messages so I can have it respond on my behalf. This is something Siri should be able to do and Gemini Live ‘could’ potentially do, so it’s only a matter of time.

o1 to get a full release with access to more features

OpenAI announced o1, the “reasoning model” earlier this year. It was previously known as Project Strawberry and even Q*. Unlike GPT-4 or even Claude 3.5 Sonnet, o1 spends time working through a problem before presenting a response.

It is much slower than other models, but it’s great for more complex tasks. It is also good for planning and project work, as it can present a complete, well-reasoned report. So far we’ve only seen o1 as a preview model or a mini version. We’re expecting the full release this week.

There are some hints that the announcement will actually be bigger than just the full version of o1, with rumors pointing to a new combined model that takes the best features of GPT-4o and merges them with the reasoning of o1.

This could see o1 get access to image generation, search and data analysis. Having a reasoning model able to work with the full range of tools is a significant upgrade.

Sora: The movie-making AI machine

When Sora was first revealed earlier this year, it was so far ahead of the competition that it shook the scene, including AI creators and Hollywood filmmakers alike. It could generate multiple scenes from a single prompt in high resolution. At the time, the best available models could generate only 2 to 5 seconds of a single shot.

Of everything OpenAI could announce during its “12 days” event, Sora is probably the most hotly anticipated. It is, however, less impressive than it would have been six months ago, as we’ve now got models like Hailuo MiniMax, Runway Gen-3 and Kling.

That said, it’s still a big deal, and the recent leak suggests it is still at the top of its game. None of the current models can generate more than 10 seconds from a single prompt, and rumors suggest this is possible with Sora.

We might not get ‘full Sora’ this week though. I’ve heard that a new Sora-turbo model could be what comes from the 12 Days of OpenAI event. This will be closer in capability to the likes of Kling and Runway than ‘full Sora’ with shorter initial clips and less control.

There will be some ‘stocking stuffers’

Sam Altman made it clear that not all of the announcements over the 12 Days of OpenAI will be big products, models or upgrades. He said there will be some “stocking stuffers,” referring to those gifts from Santa that help pad out the presents.

U.S. TV shows with more than 20 episodes in a season are famous for their own version of this: filler episodes we all regularly skip when re-watching a show. I used to get an orange wrapped in aluminum foil in my stocking each year, between the gifts.

In the context of 12 Days of OpenAI, I suspect “stocking stuffers” will refer either to very small changes to the way ChatGPT works (improved search or memory management) or to something for developers, such as cheaper API calls. It could even be a safety blog post.

A billion messages are sent every day in ChatGPT

Fresh numbers shared by @sama earlier today:

300M weekly active ChatGPT users
1B user messages sent on ChatGPT every day
1.3M devs have built on OpenAI in the US

December 4, 2024

ChatGPT is only two years old, and it was launched as a research preview. The intention was to show how you could use GPT-3.5 for conversation, but it took off and is one of the fastest-growing consumer products in history, and it keeps getting bigger.

Fresh numbers shared by Sam Altman show more than a billion messages are being sent in ChatGPT every single day from more than 300 million weekly active users.

A large part of that is coming from free users, who keep getting access to new features such as Advanced Voice and search. It will be interesting to see how many of the new features announced over the next two weeks will be available for free.

Merry ‘Shipmass’ to everyone in AI land

December is supposed to be a time when things begin to calm down in the run-up to the holiday break, but not if you cover artificial intelligence. Last year Google launched Gemini in December, and this year OpenAI is going all out with 12 days of shipmass.

Sam Altman is turning into Sam-ta Claus between now and mid-December, finally opening the toy chest that has been kept under lock and key, only to be played with by OpenAI employees and a few ‘invited guests’.

I’m obviously most excited to try Sora, but the AI lab has been sitting on a lot over the past year and a half, and we’ll have all the details right here.